Pinnability: Pinterest’s machine learning models

According to Google, Pinterest currently has 70 million monthly active users, and the number of photos (pins) grows by 75% each year, reaching 50 billion in 2015. With tens of millions of users (pinners) interacting with those pins every day, one has to wonder how Pinterest tunes its algorithms to personalize each pinner’s view so that pinners don’t exhaust themselves looking for interesting pins. Last Friday, Dr. Jure Leskovec gave us a brilliant explanation of the underlying problems and challenges Pinterest faces, such as classifying users and keeping them active. In this blog post I will review Pinnability, the collective name for the machine learning methods Pinterest uses to help pinners find the best content in their home feed.

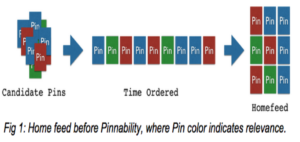

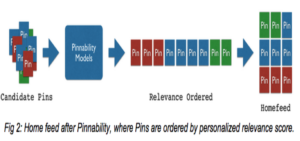

The most important feature of an app such as Pinterest is arguably the home feed: a collection of pins, boards and interests designed to keep pinners intrigued, and the best way for them to discover new content. But how does Pinterest choose one pin over another? The answer is Pinnability, part of the technology powering smart feed [1], which prioritizes candidate pins by their relevance scores and shows the highest-scoring ones at the top of the home feed. Before Pinnability, users were always shown the newest pin from their preferred sources (the method illustrated in the figures). While recency is a high priority for some users, it generally isn’t the most important factor.

The Pinnability models predict scores that represent the personalized relevance between a pinner and each candidate pin, as visualized in the figures.
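To make the contrast between the old newest-first ordering and score-based ranking concrete, here is a minimal sketch. It assumes a logistic-regression-style score (a sigmoid of a weighted sum of features); the `Candidate` class, the feature vectors and the weights are all hypothetical and chosen only for illustration, not Pinterest’s actual features or model.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    pin_id: str
    created_at: int        # hypothetical timestamp: larger means newer
    features: list[float]  # hypothetical pinner-pin feature vector


def relevance(weights: list[float], bias: float, features: list[float]) -> float:
    """Logistic-regression-style relevance score in (0, 1): sigmoid(w . x + b)."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def rank_by_recency(candidates: list[Candidate]) -> list[str]:
    """Pre-Pinnability ordering: newest pin first."""
    ordered = sorted(candidates, key=lambda c: c.created_at, reverse=True)
    return [c.pin_id for c in ordered]


def rank_by_relevance(candidates: list[Candidate], weights: list[float], bias: float) -> list[str]:
    """Score-based ordering: highest predicted relevance first."""
    ordered = sorted(candidates, key=lambda c: relevance(weights, bias, c.features), reverse=True)
    return [c.pin_id for c in ordered]


if __name__ == "__main__":
    candidates = [
        Candidate("a", created_at=3, features=[0.1, 0.0]),
        Candidate("b", created_at=2, features=[0.9, 1.0]),
        Candidate("c", created_at=1, features=[0.5, 0.2]),
    ]
    weights, bias = [2.0, 1.5], -1.0  # made-up model parameters
    print(rank_by_recency(candidates))                   # ['a', 'b', 'c']
    print(rank_by_relevance(candidates, weights, bias))  # ['b', 'c', 'a']
```

The newest pin ("a") drops to the bottom once ordering depends on predicted relevance rather than creation time, which is the shift the figures describe.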

Pinnability relies on state-of-the-art machine learning models such as Logistic Regression (LR), Support Vector Machines (SVM), Gradient Boosted Decision Trees (GBDT) and Convolutional Neural Networks (CNN). To estimate the accuracy of such models, one typically splits the data into two sets, one for training the model and one for testing it. Pinterest, however, cleverly takes advantage of the online and offline versions of the software in three steps:

i) use a trained model to provide offline suggestions

ii) record the interactions and behavior in offline mode

iii) test the accuracy of the predictions.
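The three steps above can be sketched as a tiny serve, log and evaluate loop. This is only an illustration under simplifying assumptions: each impression is a single number, the "trained model" is any function returning a probability, `observe` is a hypothetical stand-in for the pinner’s recorded reaction, and plain thresholded accuracy is used as the test metric.

```python
from typing import Callable, Iterable


def serve_and_log(model: Callable[[float], float],
                  impressions: Iterable[float],
                  observe: Callable[[float], bool]) -> list[tuple[float, bool]]:
    """Steps i) and ii): score each impression with an already-trained model
    and record whether the pinner engaged with it."""
    log = []
    for imp in impressions:
        score = model(imp)      # predicted probability of engagement
        engaged = observe(imp)  # stand-in for the pinner's recorded behavior
        log.append((score, engaged))
    return log


def accuracy(log: list[tuple[float, bool]], threshold: float = 0.5) -> float:
    """Step iii): share of logged impressions where the thresholded
    prediction matches the recorded outcome."""
    correct = sum((score >= threshold) == engaged for score, engaged in log)
    return correct / len(log)


if __name__ == "__main__":
    # Toy setup: the "model" returns the feature value unchanged as a score,
    # and the pinner engages whenever the value exceeds 0.4.
    log = serve_and_log(model=lambda x: x,
                        impressions=[0.1, 0.3, 0.45, 0.6, 0.9],
                        observe=lambda x: x > 0.4)
    print(accuracy(log))  # 0.8
```

The 0.45 impression is the one miss: the pinner engaged, but the score fell below the threshold. Logged data like this is what the evaluation step works from.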

Interactions and behavior are categorized into positive and negative actions. For example, a “like” is clearly a positive action, while a “do-nothing” probably falls into the negative category. However, one could argue that a “like” is not as good as a “do-nothing” is bad. The different actions are therefore weighted differently, and by tuning these weights, Pinnability’s prediction accuracy can be optimized. A pin’s intrinsic features, the pinner’s past behavior on that type of pin, and the pinner’s documented features and interests are then combined to estimate the relevance of showing that pin. Again, normalizations and weights are varied to find the optimal model (see the quick thought in [2]).
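One common way to weight actions differently is to give each training example a label and an importance weight and then minimize a weighted log loss. The sketch below does that for logistic regression with plain batch gradient descent. The action names, their weights and the two toy features are hypothetical; the talk did not give Pinterest’s actual values, and this is not presented as Pinterest’s implementation.

```python
import math

# Hypothetical action table: action -> (label, importance weight).
# These values are illustrative assumptions, not Pinterest's real settings.
ACTION_LABELS = {
    "repin": (1, 1.0),
    "like": (1, 0.5),
    "click": (1, 0.3),
    "do_nothing": (0, 1.0),
}


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: list[float], b: float, x: list[float]) -> float:
    """Predicted probability that the pinner reacts positively."""
    return sigmoid(b + sum(wi * xi for wi, xi in zip(w, x)))


def train_weighted_lr(examples, n_features, lr=0.5, epochs=1000):
    """Batch gradient descent on the weighted log loss.

    examples: list of (feature_vector, action) pairs. Each example's loss is
    multiplied by its action weight, so a 'repin' pulls harder than a 'like'.
    """
    w = [0.0] * n_features
    b = 0.0
    total = sum(ACTION_LABELS[a][1] for _, a in examples)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, action in examples:
            y, weight = ACTION_LABELS[action]
            err = weight * (predict(w, b, x) - y)  # d(weighted loss)/dz
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wj - lr * g / total for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / total
    return w, b


if __name__ == "__main__":
    # Made-up features: [match with pinner's interests, mismatch]
    examples = [
        ([1.0, 0.0], "repin"),
        ([0.9, 0.1], "like"),
        ([0.0, 1.0], "do_nothing"),
        ([0.1, 0.8], "do_nothing"),
    ]
    w, b = train_weighted_lr(examples, n_features=2)
    # A pin matching the pinner's interests should outrank a mismatched one.
    print(predict(w, b, [1.0, 0.0]) > predict(w, b, [0.0, 1.0]))
```

Changing the entries in `ACTION_LABELS` and retraining is a simple stand-in for "playing with the scaling parameters" described above.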

When evaluating trained models, Pinterest uses the Area Under the ROC Curve (AUC) as its main metric. By Wikipedia’s definition, “the AUC is equal to the probability that a classifier will rank a randomly chosen positive instance higher than a randomly chosen negative one (when using normalized units)” [3]. Pinterest uses this metric not only because of its popularity in similar systems but, interestingly, because it has measured a strong positive correlation between AUC gains in offline testing and increases in pinner engagement in online experiments. The reported AUC for the home feed averages around 90%.
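The probabilistic definition of AUC translates directly into code: compare every positive example with every negative one and count how often the positive is scored higher. The sketch below is a straightforward pairwise version for illustration; the scores and labels in the example are made up, and production systems usually use an equivalent rank-based (Mann-Whitney U) computation that runs in O(n log n).

```python
def auc(scores: list[float], labels: list[int]) -> float:
    """AUC as the probability that a randomly chosen positive example is
    scored higher than a randomly chosen negative one (ties count as 1/2).

    This pairwise version is O(P*N); it follows the probabilistic definition
    directly rather than integrating the ROC curve.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.4, 0.3, 0.2]  # made-up predicted relevance
    labels = [1, 0, 1, 0, 0]            # 1 = pinner engaged, 0 = did not
    # 5 of the 6 positive-negative pairs are ranked correctly.
    print(auc(scores, labels))
```

An AUC of 0.5 corresponds to random ordering and 1.0 to a perfect ranking, so a home-feed AUC around 90% means a randomly chosen engaged pin outranks a randomly chosen ignored one about nine times out of ten.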

Pinterest is still enhancing Pinnability, with updates improving pinner engagement, for example increasing the repinner count by more than 20%. With these major improvements to the discovery engine in the home feed, Pinterest has started expanding the use of its Pinnability models to improve its other products. These are definitely state-of-the-art models, and with such constant improvements one has to wonder where their limits and future applications lie.

[1] https://medium.com/@Pinterest_Engineering/building-a-smarter-home-feed-ad1918fdfbe3

[2] A quick thought: the first idea that comes to mind is finding a model that optimizes the global scaling of those dynamic parameters. I wonder, however, whether Pinterest optimizes them individually for pinners and pins, fitting their own parameters and therefore (probably) producing a more precise model. It should be quite doable, but it might require processing power that isn’t worth the gain.

[3] https://en.wikipedia.org/wiki/Receiver_operating_characteristic#Area_under_curve

[4] Also a very interesting article about deep learning at Pinterest: https://medium.com/the-graph/applying-deep-learning-to-related-pins-a6fee3c92f5e

4 comments on “Pinnability: Pinterest’s machine learning models”


Great post! I didn’t realize they were testing so many models all the way from Logistic Regression to SVMs to CNNs. I wonder if they are also using RNNs or LSTMs for their “memory” aspect.

MSE 238A

Thank you for sharing. I enjoyed reading about the applications of AUC and logistic regression at Pinterest. I have a question about using logistic regression, though: have you looked into how Pinterest prevents collinearity between features? The coefficients become unstable when the features are collinear.

In addition, the speaker’s view on cross-validation error was enlightening. He mentioned that the difference between online and offline metrics makes evaluating the model very difficult. I think it would be interesting to explore this topic in more depth. Again, thank you for the post!

This was a really interesting read. I would like to add that Pinterest primarily serves as a platform for small businesses to market to users. More than half a million businesses have Pinterest accounts, and according to Pinterest, 75% of content is generated by those business accounts. That means a majority of the content is advertising, with Pinterest doing the targeted marketing through its ML algorithms. The situation is great for the businesses involved, and users get content they are interested in on a platform that focuses on continuous improvement.

Hi Helgi, thank you for this interesting article!

I did not know about Pinterest before Mr. Jure Leskovec’s talk, and his description of this new tool and its underlying challenges from a technical point of view definitely caught my attention. I liked the way you clarified the mechanism while explaining how Pinnability works. Now I am convinced that the growing popularity of the site comes from the efficiency of its algorithms. I guess it is time to subscribe!