Will Cloud Computing Make the Benefits of Artificial General Intelligence Accessible to Everyone?

Artificial intelligence plays an increasingly important role in our daily lives. Almost all smartphones are equipped with intelligent personal assistants, hospitals use computer vision to make more accurate diagnoses, customer service and sales representatives are being replaced with machines, and companies such as Amazon predict the product that you will buy next. So far, however, artificial intelligence has only been applied to specific tasks; there is no artificial intelligence that can perform a wide variety of unrelated jobs. This form of artificial intelligence, which can only be applied to very specific problems, is called artificial narrow intelligence; it does not reason about complex concepts or understand abstract ideas.

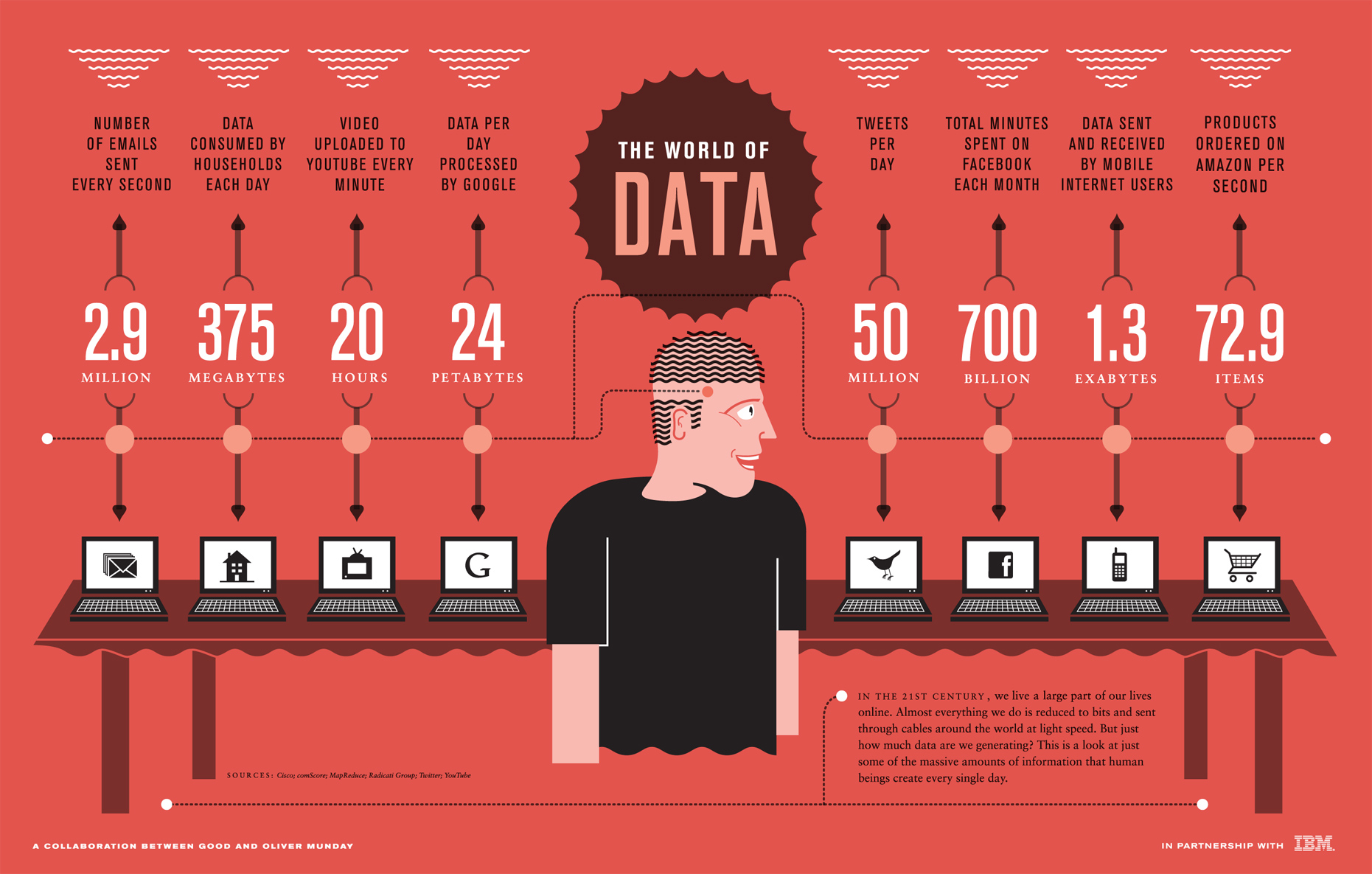

Progress in artificial intelligence has, so far, been driven by three main factors: increasing computing resources, more data, and better algorithms. As the number of transistors per square inch on an integrated circuit and the amount of digital data continue to double every two years, and researchers come up with better algorithms, artificial intelligence capabilities will likely grow significantly over the next decades (https://www.economist.com/news/business/21717430-success-nvidia-and-its-new-computing-chip-signals-rapid-change-it-architecture, http://www.eetimes.com/author.asp?section_id=36&doc_id=1330462). In a recent survey among artificial intelligence researchers, the median estimate of when an artificial intelligence will be as capable as the human brain was 2040 (http://www.nickbostrom.com/papers/survey.pdf). This type of artificial intelligence that can do most important intellectual tasks as well as a human is called artificial general intelligence.

http://www.forbesindia.com/blog/technology/data-deluge-needs-smarter-storage/

As artificial intelligence becomes more capable, more jobs will be automated and unemployment will rapidly rise (https://www.technologyreview.com/s/515926/how-technology-is-destroying-jobs/). The world economy will be increasingly dominated by a few large technology firms such as Google, Amazon, Facebook, Tencent, Alibaba, and Apple (https://www.technologyreview.com/s/608095/it-pays-to-be-smart/). As unemployment becomes more common and power is in the hands of a small technology elite, we need to consider how the benefits of new technological developments can still be made accessible to everyone (https://www.technologyreview.com/s/515926/how-technology-is-destroying-jobs/). How do we ensure that artificial general intelligence brings advantages to every member of society instead of only a handful of people?

As mentioned above, one of the major factors that currently prevents us from creating artificial general intelligence is computing power. In order to develop an artificial intelligence with capabilities similar to those of the human brain, we will likely need access to at least similar computing resources. The human brain is estimated to perform roughly 10 quadrillion (10^16) calculations per second (https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html). For comparison, the world’s fastest supercomputer, the Tianhe-2 (天河二号), performs about 34 quadrillion computations per second (https://www.nytimes.com/2016/06/21/technology/china-tops-list-of-fastest-computers-again.html, https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html). The Tianhe-2 cost $390 million to build, consumes roughly 24 megawatts of power, and takes up 720 square meters of space; not exactly a device that is accessible to everyone (https://www.economist.com/blogs/babbage/2013/06/supercomputers, https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html).
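To put those numbers side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above and assumes, very crudely, that cost, power, and floor space scale linearly with computing throughput; real hardware does not scale this neatly, so treat the results as orders of magnitude rather than estimates.

```python
# Figures quoted in the paragraph above; nothing here is independently measured.
BRAIN_OPS_PER_SEC = 10e15      # ~10 quadrillion calculations per second
TIANHE2_OPS_PER_SEC = 34e15    # ~34 quadrillion computations per second
TIANHE2_COST_USD = 390e6       # build cost
TIANHE2_POWER_MW = 24          # power draw in megawatts
TIANHE2_AREA_M2 = 720          # floor space in square meters

# How many "human brains" of raw compute the machine represents.
brain_equivalents = TIANHE2_OPS_PER_SEC / BRAIN_OPS_PER_SEC


def per_brain_equivalent(total: float) -> float:
    """Scale a Tianhe-2 total down to one brain's worth of compute.

    Assumes linear scaling, which is a simplification for illustration.
    """
    return total / brain_equivalents


if __name__ == "__main__":
    print(f"Brain equivalents: {brain_equivalents:.1f}")
    print(f"Cost per brain equivalent: ${per_brain_equivalent(TIANHE2_COST_USD) / 1e6:.0f} million")
    print(f"Power per brain equivalent: {per_brain_equivalent(TIANHE2_POWER_MW):.1f} MW")
    print(f"Space per brain equivalent: {per_brain_equivalent(TIANHE2_AREA_M2):.0f} square meters")
```

Under these assumptions, one brain's worth of raw compute still corresponds to a nine-figure price tag and several megawatts of power, which is the gap that shared infrastructure would have to close.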

http://www.businessinsider.com/ray-kurzweil-law-of-accelerating-returns-2015-5

For cutting-edge artificial intelligence to become widespread, we need to consider how we can best make these massive computing resources available to all people and all organizations. Cloud computing might be the solution. Cloud computing makes it possible for any organization or individual to get access to on-demand, large-scale computing services that can be used to run artificial intelligence programs. Companies such as Amazon, Microsoft, and Google can have multiple instantiations of their artificial general intelligence run on their clouds that serve customers on their desktops, mobile phones, augmented/virtual reality headsets, or even ultra-high-bandwidth brain-machine interfaces (https://www.forbes.com/sites/johnsonpierr/2017/06/15/with-the-public-clouds-of-amazon-microsoft-and-google-big-data-is-the-proverbial-big-deal/#386727c22ac3, https://www.economist.com/news/science-and-technology/21719774-do-human-beings-need-embrace-brain-implants-stay-relevant-elon-musk-enters). Since artificial intelligence learns when customers use it, the artificial intelligence will get smarter over time. Everyone will be able to tap into artificial intelligence on demand. Hopefully, in this way all members of society will be able to benefit from artificial general intelligence and perhaps even a subsequent artificial super intelligence.

Sources:

Baer, Drake. “Google’s genius futurist has one theory that he says will rule the future – and it’s a little terrifying.” Business Insider. Business Insider, 27 May 2015. Web. 12 July 2017. http://www.businessinsider.com/ray-kurzweil-law-of-accelerating-returns-2015-5

“Elon Musk enters the world of brain-computer interfaces.” The Economist. The Economist Newspaper, 30 Mar. 2017. Web. 12 July 2017. https://www.economist.com/news/science-and-technology/21719774-do-human-beings-need-embrace-brain-implants-stay-relevant-elon-musk-enters

“Fall of the titans.” The Economist. The Economist Newspaper, 18 June 2013. Web. 12 July 2017. https://www.economist.com/blogs/babbage/2013/06/supercomputers

Johnson, Pierr. “With The Public Clouds Of Amazon, Microsoft And Google, Big Data Is The Proverbial Big Deal.” Forbes. Forbes Magazine, 16 June 2017. Web. 12 July 2017. https://www.forbes.com/sites/johnsonpierr/2017/06/15/with-the-public-clouds-of-amazon-microsoft-and-google-big-data-is-the-proverbial-big-deal/#386727c22ac3

Markoff, John. “China Wins New Bragging Rights in Supercomputers.” The New York Times. The New York Times, 20 June 2016. Web. 12 July 2017. https://www.nytimes.com/2016/06/21/technology/china-tops-list-of-fastest-computers-again.html

Mittal, Ajay. “Data Deluge needs Smarter Storage | Forbes India Blog.” Forbes India. ForbesIndia, 10 May 2013. Web. 12 July 2017. http://www.forbesindia.com/blog/technology/data-deluge-needs-smarter-storage/

Müller, Vincent C., and Nick Bostrom. “Future progress in artificial intelligence: A survey of expert opinion.” Fundamental issues of artificial intelligence. Springer International Publishing, 2016. 553-570. http://www.nickbostrom.com/papers/survey.pdf

Rizatti, Lauro. “Digital Data Storage is Undergoing Mind-Boggling Growth | EE Times.” EETimes. N.p., 14 Sept. 2016. Web. 12 July 2017. http://www.eetimes.com/author.asp?section_id=36&doc_id=1330462

Rotman, David. “Our economy is increasingly ruled by a few standout tech firms, and that’s not a good thing.” MIT Technology Review. MIT Technology Review, 07 July 2017. Web. 12 July 2017. https://www.technologyreview.com/s/608095/it-pays-to-be-smart/

Rotman, David. “How Technology Is Destroying Jobs.” MIT Technology Review. MIT Technology Review, 01 Sept. 2016. Web. 12 July 2017. https://www.technologyreview.com/s/515926/how-technology-is-destroying-jobs/

“The rise of artificial intelligence is creating new variety in the chip market, and trouble for Intel.” The Economist. The Economist Newspaper, 25 Feb. 2017. Web. 12 July 2017. https://www.economist.com/news/business/21717430-success-nvidia-and-its-new-computing-chip-signals-rapid-change-it-architecture

Urban, Tim. “The Artificial Intelligence Revolution: Part 1.” Wait But Why. N.p., 04 Feb. 2017. Web. 13 July 2017. https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html


2 comments on “Will Cloud Computing Make the Benefits of Artificial General Intelligence Accessible to Everyone?”


Will Cloud Computing Make the Benefits of Artificial General Intelligence Accessible to Everyone?

I believe that it already is.

AI and ML are already being used for medical research, and everyone stands to benefit from them. According to TechEmergence, several medical facilities are already utilizing ML in pharma and medicine (https://www.techemergence.com/applications-machine-learning-in-pharma-medicine/).

In particular, Memorial Sloan Kettering uses IBM Watson for Disease Identification/Diagnosis, Personalized Treatment/Behavioral Modification, Drug Discovery/Manufacturing, Clinical Trial Research, and several others. In the future, “micro biosensors and devices, as well as mobile apps with more sophisticated health-measurement and remote monitoring capabilities, will provide another deluge of data that can be used to help facilitate R&D and treatment efficacy.”

https://youtu.be/TuxL3yzXxJo

In the article you linked to on MIT Technology Review (https://www.technologyreview.com/s/515926/how-technology-is-destroying-jobs/), the authors stated that “rapid technological change has been destroying jobs faster than it is creating them.”

It is an interesting hypothesis. I believe that AI will create several opportunities within the medical industry, but it will also eliminate the jobs of several medical professionals. As cognitive abilities grow, trained professionals will still need to provide input to validate the reliability of results, e.g. input in the form of a “reliability factor.” For example, if a nurse or doctor inputs several medical symptoms into a system that utilizes AI and ML, the system provides output in the form of a diagnosis. A trained medical professional would still need to apply a reliability factor to several aspects of the treatment, whether it is related to a symptom, a diagnosis, or the medication prescribed. While some diagnoses might lead to treatment that alleviates the condition the first time around, it might not always work that way. I imagine that systems using cognitive functions will look for patterns in the data, correlate one source of data with another, and form a conclusion based on their inference logic. Regardless, there will need to be several years of input to allow the system to grow.

Imagine the power of AI and what we can do with it. It could be used to find a cure for cancer through genomic and/or medical research. I am a strong advocate of it.

Interesting article. I also think that cloud computing will surely contribute to AI being more accessible. Currently there are some instances where AI is already being used. As Dr. Jeff Welser mentioned during his presentation, IBM Watson’s services are available for hire, and they are already being used in some places like the healthcare industry.

Regarding artificial general intelligence, I found this article that talks about Anodot, a “data agnostic” system that supposedly can analyze any kind of time series data and detect anomalies (https://www.forbes.com/sites/danwoods/2017/07/13/has-anodot-defined-the-principles-of-general-purpose-ai/#4ccd9161707e).

Even though Anodot is still labeled as “user-assisted AI”, the fact that it can analyze different kinds of data is an important step towards achieving artificial general intelligence.