The Impact of Virtualization on Data Center Infrastructure

VMware led the industry in server virtualization technology, or the “process of creating a software-based representation of something rather than a physical one. This applies to applications, servers, storage and networks.” (https://www.vmware.com/solutions/virtualization.html). As this technology hit the mainstream data center market, it allowed for a more efficient IT platform by running fewer servers at a higher utilization rate.

Those who design and build data centers took great interest in this new technology and how it would impact the power and cooling infrastructure. At the same time, the industry was re-examining how data centers were engineered and constructed to obtain greater energy efficiencies while maintaining reliability and uptime.

The Legacy Data Center

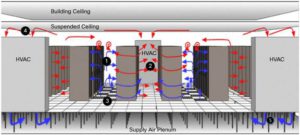

Up until about ten years ago, most data centers operated with servers placed on top of a raised floor system, which was used for power and fiber pathways as well as for the air delivery system that maintained a very cold temperature (55-60 °F). Cabinet power densities were relatively low (around 2-3 kW/cabinet), and the IT cabinets saw utilization rates on the order of 15%. Almost every watt of power a server draws is rejected as heat into the room and needs to be cooled. Computer Room Air Conditioners (CRACs) were run at full speed.

Raised floor data center, Image courtesy of Cisco Systems

This resulted in a very inefficient system and very high operational expenses. Data center operators didn’t care because avoiding the thermal failure of a server, and the associated downtime, took precedence over energy efficiency.

The Virtualized Data Center

Virtualization initially resulted in fewer cabinets with higher power densities, close to 10 kW/cabinet. There was concern that in legacy data centers this would result in “hot spots”, where the existing cooling infrastructure could not handle the increased load.

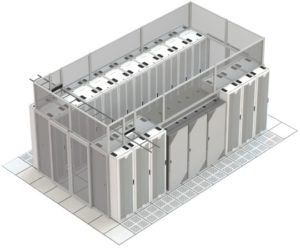

In parallel, the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) Technical Committee 9.9 (https://tc0909.ashraetcs.org) had been establishing best practices for the cooling of datacom spaces. Based on years of research, it recommended higher inlet temperatures for IT servers, raising them from 55 °F to as much as 80 °F. To take advantage of these higher allowed temperatures, the design and construction industry adopted a solution known as “aisle containment”: the installation of a physical barrier that prevents air leaving the back (hot side) of the cabinet from recirculating into the inlet (cold side) of the server.

Example of “Hot Aisle Containment”, Image courtesy of Subzero Engineering,

(https://www.subzeroeng.com/services/aisle-containment/hot-aisle-containment/)

What Happened to the Raised Floor?

As the implementation of virtualization became more commonplace and servers became more efficient and powerful, data center operators no longer feared operating IT cabinets at higher power densities (kW/cab). The evolution of data center design also questioned the need for raised access flooring as a cooling air delivery pathway as the higher cabinet power densities began to exceed the physical limitations of volumetric flow and air velocities of the perforated supply floor tiles.
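
To see why perforated tiles become a bottleneck, the airflow a cabinet needs can be estimated with the standard sensible-heat relation for air near sea level (BTU/hr = 1.08 × CFM × ΔT in °F). The sketch below is illustrative only: the 20 °F temperature rise across the servers and the 500 CFM delivered per tile are assumed example values, not figures from any design discussed here.

```python
import math

# Standard-air sensible heat relation: BTU/hr = 1.08 * CFM * delta_T (deg F)
SENSIBLE_HEAT_FACTOR = 1.08
BTU_PER_HR_PER_WATT = 3.412


def required_cfm(cabinet_kw, delta_t_f=20.0):
    """Airflow (cubic feet per minute) needed to carry away cabinet_kw of heat.

    delta_t_f is the assumed air temperature rise across the servers.
    """
    btu_per_hr = cabinet_kw * 1000.0 * BTU_PER_HR_PER_WATT
    return btu_per_hr / (SENSIBLE_HEAT_FACTOR * delta_t_f)


def tiles_needed(cabinet_kw, cfm_per_tile=500.0, delta_t_f=20.0):
    """Perforated tiles needed per cabinet, for an assumed per-tile airflow."""
    return math.ceil(required_cfm(cabinet_kw, delta_t_f) / cfm_per_tile)


if __name__ == "__main__":
    # Cabinet densities mentioned in the article: legacy, virtualized, slab-on-grade
    for kw in (2.5, 10.0, 30.0):
        print(f"{kw:5.1f} kW/cabinet -> {required_cfm(kw):7.0f} CFM, "
              f"{tiles_needed(kw)} tile(s)")
```

Under these assumptions a legacy cabinet is served by a single tile, while a 30 kW cabinet would need far more tiles than physically fit in front of it, which is the limitation that pushed designs toward overhead or lateral air delivery.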

Facebook recognized in 2011 that data centers are a necessity in our society, but that their environmental impact was potentially negative given their huge carbon footprint. As noted in a 2015 Business Insider article by Julie Bort, Facebook decided to go public with their designs in the Open Compute Project in an effort to push the data center industry toward more efficient design and operations (http://www.businessinsider.com/facebook-open-compute-project-history-2015-6).

One of the first major data centers I had heard of without a raised floor was Facebook’s Prineville, OR facility (other data centers may have done this first, but not been as public about it). This approach of installing the server cabinets directly onto the slab while integrating aisle containment and delivering the cooling air from above or laterally into the data hall was unique. Since then it has become mainstream and I have used it many times in my own data center designs. This approach can provide airflow cooling to cabinet power densities of up to 30 kW/cabinet.

Data Center installed on Slab on Grade, Image courtesy of Facebook

(https://newsroom.fb.com/media-gallery/data-centers-2/server-room-dark1/)

Virtualization Has Changed the Industry

Virtualization is driving the industry to the model of a software-defined data center, which allows for more efficient IT utilization. This would lead one to believe that data centers could become physically smaller, when in fact the opposite has happened. The major cloud providers are using virtualization and designing facilities capable of handling over 50 megawatts of critical IT computing power, and High Performance Computing centers can see individual cabinet power densities in the range of 60 to 100 kW/cabinet. The engineering and construction industry has responded with new technologies and methods in cooling and power delivery based on industry research from organizations such as ASHRAE. With each new advance in IT-based technology, the engineering and construction industry must evaluate it and respond with solutions that maintain both data center reliability and energy efficiency.

8 comments on “The Impact of Virtualization on Data Center Infrastructure”

Excellent post, Robert. My current employer built two centers to host wireless provider applications in 2003. The centers were and are geo-redundant, and were built/cabled according to BELLCORE standards. Raised floors are not used, and cabling is segregated in overhead trays by power, fiber, broadband, cat5, etc. Racks are telecom-grade, with multiple segregated power distribution units and separate routing for “A” and “B” sides. Most telecom equipment (GMSC, MSC, STP, etc.) is -48 VDC, so the centers have rectifiers, multiple power feeds from distributed sources, backup generators, battery banks, etc., as well as a large number of security features. We have about 1100 servers in each facility. Some are simple 1U servers, while others are blade servers from various manufacturers.

My experience with blade servers in a prior life was that in a large cloud provider environment using a raised floor design, the facility operator usually spaced blade servers in separate enclosures with clearance on all sides to ensure they had sufficient airflow to the servers. There was concern that adjacent spacing at the time would overrun HVAC capacity, just as you noted.

David – Thanks for the reply! As your team found out, there is a relationship in the data center between space, power, and cooling. One of those points on the triangle is the limiting factor and caps out the data center. With the blade servers you can go with a higher individual cabinet power density, but with fewer cabinets and more space in between to allow for the air flow. The other aspect of airflow is that the air velocity has to remain lower than 350 feet per minute throughout the data hall; otherwise the individual server fans can’t overcome the velocity pressure gradient. We use Computational Fluid Dynamics (CFD) software to validate our designs.
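
As a back-of-the-envelope version of that velocity check, average air velocity is just volumetric flow divided by the open cross-section it passes through. This is only a rough sketch: the 350 feet per minute limit comes from the reply above, while the aisle dimensions and airflow values are hypothetical, and it says nothing about the local velocities a CFD model would resolve.

```python
MAX_VELOCITY_FPM = 350.0  # limit quoted in the reply above


def air_velocity_fpm(airflow_cfm, cross_section_sqft):
    """Average air velocity (ft/min) = volumetric flow (CFM) / area (sq ft)."""
    if cross_section_sqft <= 0:
        raise ValueError("cross_section_sqft must be positive")
    return airflow_cfm / cross_section_sqft


def within_velocity_limit(airflow_cfm, cross_section_sqft,
                          limit_fpm=MAX_VELOCITY_FPM):
    """True if the average velocity stays below the limit."""
    return air_velocity_fpm(airflow_cfm, cross_section_sqft) < limit_fpm


if __name__ == "__main__":
    aisle_area = 4.0 * 8.0  # hypothetical aisle opening: 4 ft wide x 8 ft tall
    for cfm in (10_000.0, 12_000.0):  # hypothetical airflow through the aisle
        v = air_velocity_fpm(cfm, aisle_area)
        status = "ok" if within_velocity_limit(cfm, aisle_area) else "too fast"
        print(f"{cfm:8.0f} CFM -> {v:6.1f} ft/min ({status})")
```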

Great article, Robert. I do believe in the concept of virtualization and the software-defined data centre. What about basic users and their servers? A few thoughts about it below. I look forward to your opinion.

Many people still believe that the local storage capacity of a computer, laptop, smartphone, or any other computing device with direct-attached storage (DAS) will keep increasing, and drastically. Beyond that horizon, local capacities will start dropping as network-attached storage (NAS or cloud) becomes the default storage methodology.

The cloud is not fast enough today, even for those with gigabit desktop connections. The typical network connection gives you 20 Mb/s upload speed, while even the slowest hard drives give you 150+ MB/s read (i.e. upload) speed. Of course, network speeds will increase, but can we say with confidence when exactly and by how much?

So, bluntly speaking, the cloud drive on the network is not yet accessible fast enough to replace the local hard disk or flash memory embedded in the computer, laptop, smartphone, motherboard, or PCB. For people with much faster connections, say multi-gigabit networks with more than 150 MB/s upload speed, yes, technically you don’t need a hard drive or flash memory anymore, and you can use the network to reach cloud storage as your default storage. However, what happens when the network is not available? We will still need a backup solution, i.e. DAS.
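
The figures in this comment mix megabits (Mb) and megabytes (MB), so it helps to put them in the same unit. The sketch below is a rough illustration that assumes decimal units (1 GB = 1000 MB) and ignores protocol overhead and latency; the 10 GB file size is an arbitrary example.

```python
BITS_PER_BYTE = 8.0


def mbit_to_mbyte_per_s(mbit_per_s):
    """Convert a link rate in megabits/s to megabytes/s."""
    return mbit_per_s / BITS_PER_BYTE


def transfer_seconds(size_gb, rate_mbyte_per_s):
    """Time to move size_gb at a sustained rate, using 1 GB = 1000 MB."""
    return size_gb * 1000.0 / rate_mbyte_per_s


if __name__ == "__main__":
    rates = {
        "20 Mb/s upload": mbit_to_mbyte_per_s(20.0),
        "1 Gb/s link": mbit_to_mbyte_per_s(1000.0),
        "150 MB/s local disk": 150.0,
    }
    for name, mbyte_per_s in rates.items():
        secs = transfer_seconds(10.0, mbyte_per_s)  # arbitrary 10 GB file
        print(f"{name:20s} {mbyte_per_s:7.1f} MB/s  {secs:7.0f} s for 10 GB")
```

Put this way, a 20 Mb/s uplink moves 2.5 MB/s, and even a fully used 1 Gb/s link tops out at 125 MB/s, still below the 150 MB/s disk figure quoted above.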

Michaela – Thanks for the reply! Latency within the data center certainly is an issue in some applications, but not so much for others. This is a bit outside my expertise, but I know some enterprises have data centers dedicated to “cold storage” applications such as storing pictures or data that aren’t regularly accessed. Take a data center specifically housing EMRs (Electronic Medical Records). My doctor only needs to access those every so often when I go into the office to see how my cholesterol is trending. That application doesn’t require high bandwidth, but if they need to look at a CT scan right before a patient ends up on the operating table, then it’s in their best interest that it’s a high-speed delivery.

I appreciate how this post looks at the infrastructure required to provide the technology. It seems that this behind-the-scenes information is usually an afterthought, especially in the minds of consumers.

I agree that virtualization is one of the most important innovations when it comes to the cloud as we know it today. While the technology and concepts have been around for a long time once the market and the hardware caught up it made a drastic impact on the industry.

The changes to the infrastructure housing the servers required for these services are fascinating to me. Apparently in the earlier days these facilities were embarrassingly inefficient compared to what is being built today, largely due to shifts in design focus and the realization that the infrastructure can have an enormous impact on the bottom line. It was in the news a few years ago that first Sun, then Google and others, were starting to build optimized modules inside old shipping containers that contain power, cooling, servers, etc., so they can easily be swapped in and out and scalability becomes a much simpler issue to address.

http://www.nytimes.com/2006/10/17/technology/17sun.html

http://www.datacenterknowledge.com/archives/2009/04/01/google-unveils-its-container-data-center/

As the business booms, it seems these innovations are just the tip of the iceberg. A forward-looking example would be the patents Google has filed for floating data centers. The idea is that cooling resources are abundant, offshore facilities save on taxes, and ocean energy can be harvested for electricity. An interesting question would be how to enable reliable connectivity with sufficient bandwidth at such a remote and unpredictable location.

http://www.datacenterknowledge.com/archives/2008/09/06/google-planning-offshore-data-barges/

Kyle – Thanks for the reply! Efficiency has gotten quite a bit better even over the last few years. The Green Grid (a data center industry organization, http://www.greengrid.org) developed an efficiency metric called PUE (Power Usage Effectiveness). It’s all the power delivered to the data center (lights, cooling, IT) divided by just the power for the IT cabinets. The closer the ratio is to 1.0, meaning that nearly all the power delivered to the data center goes to the IT compute equipment, the more efficiently the data center operates. Over the last ten years the estimated average PUE of a legacy data center was around the 2.0 mark. Very inefficient. Now we are seeing a 50%-70% reduction in PUE values for a new build just using standard best practices. Given the size of the hyperscale cloud data centers (50+ megawatts), that is quite a bit of savings!
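
The PUE ratio described here is simple enough to sketch in a few lines. The loads below are made-up example numbers chosen only to show the arithmetic, not measurements from any facility.

```python
def pue(total_facility_kw, it_kw):
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("it_kw must be positive")
    if total_facility_kw < it_kw:
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_kw


def overhead_kw(total_facility_kw, it_kw):
    """Power spent on everything other than IT (cooling, lighting, losses)."""
    return total_facility_kw - it_kw


if __name__ == "__main__":
    it_load = 1000.0  # hypothetical IT load in kW
    for label, total in (("legacy", 2000.0), ("new build", 1300.0)):
        print(f"{label:10s} PUE = {pue(total, it_load):.2f}, "
              f"overhead = {overhead_kw(total, it_load):.0f} kW")
```

Because the IT load sits in the denominator, a PUE of 2.0 means every kilowatt of compute carries another kilowatt of overhead, and the floor of the metric is 1.0.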

You would not believe how many Data Centers I have walked into that feel like an oven. I enjoyed how you articulated the difference between Legacy and Modern Data Center Design.

As a result of how Data Center Design has changed over the years, manufacturers like Cisco, Juniper, Arista and Brocade (now Extreme Networks) have changed their hardware designs to support Front-to-Back or Back-to-Front airflow, improving cooling not only for the devices themselves but also for the Power Supplies within each unit.

Whether it’s a top of rack switch like the 3850 or a Nexus 7700, understanding airflow design is very important in building a Data Center. It’s quite true that virtualized compute has revolutionized Data Center design and altered how Mechanical Engineers manage power and cooling scenarios.

A Cisco Validated Design for Nexus Data Centers

Nexus switches are highly modular and designed to be positioned with their ports in either the front or the rear of the rack, depending on your cabling, maintenance and airflow requirements. Depending on which side of the switch faces the cold aisle, it’s important to use fan and power supply modules that move the coolant air from the cold aisle to the hot aisle in one of the following ways:

A. Port-side exhaust airflow—Coolant air enters the chassis through the fan and power supply modules in the cold aisle and exhausts through the port end of the chassis in the hot aisle.

B. Port-side intake airflow—Coolant air enters the chassis through the port end in the cold aisle and exhausts through the fan and power supply modules in the hot aisle.

C. Dual-direction airflow—Airflow direction is determined by the airflow direction of the installed fan modules.

http://www.cisco.com/c/en/us/td/docs/switches/datacenter/hw/nexus7000/installation/guide/b_n7706_hardware_install_guide.pdf
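
The choice between the first two options above comes down to which aisle the port side faces. The following is a minimal sketch of that decision and of a consistency check on installed modules; the enum and function names are hypothetical and are not part of any Cisco tool or API.

```python
from enum import Enum


class Aisle(Enum):
    COLD = "cold"
    HOT = "hot"


class Airflow(Enum):
    PORT_SIDE_INTAKE = "port-side intake"    # air enters at the port end
    PORT_SIDE_EXHAUST = "port-side exhaust"  # air leaves at the port end


def required_airflow(port_side_faces):
    """Pick the airflow direction that moves air from cold aisle to hot aisle."""
    if port_side_faces is Aisle.COLD:
        return Airflow.PORT_SIDE_INTAKE
    return Airflow.PORT_SIDE_EXHAUST


def modules_match(port_side_faces, installed_modules):
    """True if every fan/power supply module blows in the required direction."""
    needed = required_airflow(port_side_faces)
    return all(module is needed for module in installed_modules)


if __name__ == "__main__":
    # Hypothetical rack: ports face the hot aisle, one module is mismatched
    modules = [Airflow.PORT_SIDE_EXHAUST, Airflow.PORT_SIDE_INTAKE]
    print(required_airflow(Aisle.HOT).value)
    print(modules_match(Aisle.HOT, modules))
```

A mismatched module would pull hot-aisle air back through the chassis, which is the recirculation problem aisle containment is meant to prevent.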

Nexus 7700 Front-to-Back Cooling

https://image.slidesharecdn.com/hitechdaynewswitchingsolutionsdfusikv3-170420002906/95/hawaii-tech-day-new-solution-in-switching-76-638.jpg?cb=1492648159

If you were to evaluate the average Data Center, or Data Centers built by smaller organizations, you would find that many are not much more than an average MDF (Main Distribution Facility), and in many cases they are very warm.

Cold Aisle Containment refers to a strategy where Data Center designers build an enclosed area to keep networking equipment, UCS and Blade Servers as cold as possible. This requires Front-to-Back airflow to ensure that warm air is expelled out of the cold aisle. In addition to applying Data Center Design and cooling strategies to networking equipment, it is important to understand that Solid State Drives (SSD) run cooler than modern Hard Disk Drives (HDD) with energy saving features.

Data Center Tour

https://www.nytimes.com/video/technology/100000001766676/what-keeps-a-data-center-going.html

Christian – A majority of data center cooling issues are due to improper air flow. We use Computational Fluid Dynamics (CFD) software to ensure the air gets where it needs to, especially in an event where you have a failure of a single CRAC unit somewhere out on the floor. I see this issue in a lot of legacy data centers and smaller server/MDF rooms vs. the new purpose-built facilities. My company uses the CFD program from Autodesk, https://www.autodesk.com/products/cfd/overview. I would also say that most of the new builds I see operate with hot aisle containment vs. cold, but I am looking at one retrofit right now that would go cold aisle based on the existing layout and the location of the CRAC units, which have front-side air return.