New Product breakthroughs with recent advances in deep learning and future business opportunities

Even though artificial intelligence was introduced in the early fifties, it has only become attainable very recently, thanks to advances in deep learning and artificial neural networks, the increased performance of transistors, and the latest GPUs, TPUs, and CPUs.

Before going over the role of artificial intelligence (AI) and machine learning (ML) in the Google and Apple products highlighted in their 2017 keynotes, I would like to shed some light on the differences between AI and ML. I will also briefly explain how deep learning combines the two and how together they are opening up new business opportunities.

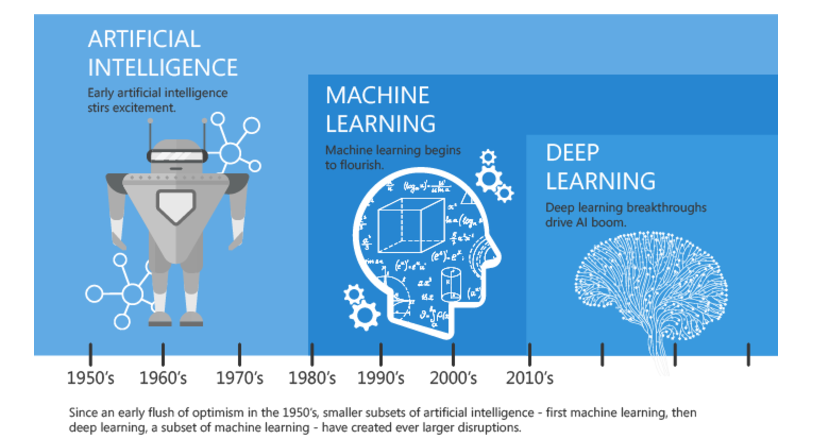

Picture 1: evolution of deep learning (Source [1])

Artificial intelligence, in its classic form, is simply a machine tuned to cater to our requirements. Examples include PIC-microcontroller-based room temperature measurement and the currently popular facial recognition features in the latest iOS and Android smartphones. A classic AI can therefore perform specific tasks as instructed, but it is not capable of learning on its own or reprogramming itself.

Machine learning processes real-world data with algorithms, analyzes the results, and refines its predictions of the output over multiple iterations. AI can be used to further refine these algorithms or generate new ones, and machine learning programs can align themselves to them to get results of much finer granularity.
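To make the "multiple iterations" idea concrete, here is a minimal sketch of a program that learns a straight line from data by repeatedly adjusting its guess. The function name `fit_line`, the learning rate, the iteration count, and the toy data are all my own assumptions for illustration; real ML systems are far more elaborate.

```python
def fit_line(xs, ys, learning_rate=0.05, iterations=2000):
    """Fit y = w*x + b by gradient descent on the mean squared error.

    Illustrative only: the name and default values are assumptions.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(iterations):
        # Prediction errors under the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Move the parameters a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (made up for this example).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = fit_line(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Each pass analyzes how wrong the current prediction is and nudges the parameters accordingly, which is the analyze-and-predict loop described above in its simplest form.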

Deep learning, however, uses ML and AI together to break down tasks, analyze each subtask, and use this information to solve new sets of problems.

One example of deep learning is the artificial neural network (ANN), which is based on an idea of how the human brain works. An ANN finds common patterns in the given data and predicts the best result.
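As a rough illustration of the building block behind an ANN, the sketch below trains a single artificial neuron (a perceptron) to reproduce the logical OR pattern. This is far simpler than a deep network, and the names `train_perceptron`, `predict`, and `OR_SAMPLES`, as well as the learning rate and epoch count, are assumptions made for this example.

```python
def train_perceptron(samples, learning_rate=0.1, epochs=20):
    """Train one artificial neuron with the classic perceptron rule."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # Weighted sum of the inputs, followed by a step activation.
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = 1 if activation > 0 else 0
            # Adjust the weights only when the prediction is wrong.
            error = target - prediction
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, inputs):
    """Return 1 if the neuron fires for these inputs, else 0."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


# Truth table of logical OR: the pattern the neuron has to discover.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train_perceptron(OR_SAMPLES)
print([predict(weights, bias, inputs) for inputs, _ in OR_SAMPLES])
```

A deep network stacks many such neurons in layers and uses smoother activations and a different training rule, but the idea of adjusting weights until the pattern in the data is captured is the same.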

With this background, I would like to go over the Google and Apple keynotes regarding the use of AI and ML in their new products:

Google I/O 2017 Keynote, May 2017:

CEO Sundar Pichai opened the keynote with the theme “mobile first to AI first”, which clearly signals to the world that Google is now moving towards AI.

Google has chosen three major fields in which it is heavily involved in AI research and development:

- Voice: Google said that its products are now better at voice recognition, with the speech recognition error rate dropping from 8.5% to 4.7% over the past year. It showcased “Google Assistant”, described as a conversation with Google to get things done in your world. The major features of this product are speech recognition and text patterning.

- Vision: Google said that the image recognition algorithms used in its products now outperform humans. Its new product, “Google Lens”, has vision-based capabilities that can understand what you are looking at and help you by providing related information.

- Data Storage: With advancements in AI, Google has started to redesign the hardware architecture of its Tensor Processing Unit (TPU). It launched its next generation of TPUs, called “Cloud TPUs”, which are optimized for both training and inference. A single Cloud TPU is capable of 180 trillion floating-point operations per second, which is definitely one of the major breakthroughs that can take AI to the next level.

Google is also working on a reinforcement learning approach to neural networks to bring AI to a wide range of disciplines:

- Healthcare: breast cancer diagnosis

- Biology: improving the accuracy of DNA sequencing to detect genetic diseases

- Chemistry: predicting the properties of molecules

Apple WWDC 2017, June 2017:

CEO Tim Cook announced six new products, each of which makes use of AI wherever possible:

- Apple TV: Amazon Prime Video is coming to the platform, bringing more content

- Apple Watch: voice intelligence and AI to track your daily routines

- Mac: the new iMac Pro, a workstation-class machine with high-end GPUs and up to 18-core Xeon CPUs, designed mainly for real-time 3D rendering, ML, and complex AI simulations and analysis

- iOS 11:

  - Siri, for understanding voice context

  - Apple Maps, with interesting AI features

  - A machine learning framework (Core ML) that supports deep neural networks and recurrent neural networks

  - Augmented reality tools for fast and stable motion tracking, etc.

- iPad: a 12-core GPU to support many AI applications

- HomePod: an A8 chip that supports real-time acoustic modeling and audio beamforming

Both Apple and Google are trying to take advantage of deep learning in their products, and this is bound to have a huge impact on how people live. We can expect many more AI-based products from them in the near future.

What can we expect in the future?

Based on these keynotes, I personally feel that tech companies have some business challenges to deal with before bringing AI into many other fields. Some of them are:

- Providing value to customers even when deep learning fails in some cases. There needs to be a plan B in case the whole idea fails.

- Since AI and ML are complex fields, only a handful of experts are currently working on them. Companies and universities need to educate and train people and create resources.

- A constant focus on designing better GPUs, TPUs, and multicore CPUs is needed in order to explore AI opportunities in various disciplines.

Despite these challenges, the chip design industry is now building more and more powerful hardware platforms, such as Nvidia Volta GPUs, Intel K-series Core processors, Google's next-generation TPUs, and Apple workstations. All of these devices are capable of taking on the next level of complex AI challenges and refining existing AI applications.

I feel confident that AI can help solve many real-world problems, such as planning a city, constructing buildings, designing unmanned aerial vehicles, and developing machines that can recognize human emotions. The list simply grows! This will open up new business opportunities and will become a game changer for the world economy in the coming days.

References:

[2] Google I/O 2017 Keynote, May 2017: https://goo.gl/4vBJZT

[3] Apple WWDC 2017, June 2017: https://www.apple.com/apple-events/june-2017/


5 comments on “New Product breakthroughs with recent advances in deep learning and future business opportunities”


Good afternoon Randem Rao. This certainly was informative! You mentioned that there is a worldwide need for experts in AI and ML, and that universities and companies should now put their minds to educating people in those areas. Are universities and companies making enough of an effort to do so? If not, how big is the demand, or future demand, for experts in the field? Is it worthwhile for a person interested in those areas to study them?

Also, you provided us with plenty of information on two of the biggest companies in the field, Google and Apple, but what about the rest of the “tech companies”? Are smaller companies focusing on AI and ML, or is it only something for the biggest companies?


Hi Lucas,

Thanks for reading my blog. As we have seen, deep learning is a fine art that uses both AI and ML to solve real-world problems with a great level of accuracy. It has also been shown that, on some tasks, solutions based on deep learning achieve much better results than an average human! This definitely makes it possible to apply deep learning to many real-world problems. Over the past few years, a lot of research and development has been happening in this field. Universities, tech giants, and start-ups are trying to make use of AI in their respective fields of interest, and collective efforts to educate the world have already started, but they need to reach more people. There are many machine learning tools available today, several of them open source, such as Amazon Machine Learning, Google's TensorFlow, Oryx 2, Apache projects, and many more, which one can use to learn this technology. Machine learning courses are also offered at many universities.

With the advancements in the chip design industry, one can now imagine implementing complex neural networks that do certain jobs better than a human brain! So we can start to see uses for deep learning in medicine, chemistry, biology, education, building a better society, and a lot more. With this, there is a definite need for more resources in this technology, so I personally feel that deep learning expertise will be in demand in the future.

Due to the word limit, I was not able to mention other developments in this field. Companies like Nvidia are working extensively on using deep learning to design chips that support self-driving cars, as well as better graphics chips for more realistic 3D games. Amazon data centers are trying to adopt deep learning since, as we all know, data is a crucial part of any deep learning application. Start-ups working in medicine, education, and biology have started to develop neural networks to achieve better results. And there is still so much room for further improvement.


Hi Lucas – As Ramdev explained very well, these are really exciting and disruptive technologies. There is an interesting set of articles on the demand for AI talent here: https://www.linkedin.com/search/results/content/?anchorTopic=65474

According to these articles, worldwide spending on AI is growing at ~55% CAGR, and the demand for talent is creating a brain drain in AI/ML/DL at universities. While many companies are implementing these technologies, there is a concern that big tech companies are cornering the market on talent. I would say that it is a great time to study AI.
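To get a feel for what a ~55% compound annual growth rate means, here is a small sketch of the compounding arithmetic. The function name `compound_growth`, the normalized starting value of 1.0, and the five-year horizon are assumptions for illustration; only the 55% rate comes from the articles.

```python
def compound_growth(initial, annual_rate, years):
    """Value after compounding `annual_rate` once per year for `years` years."""
    return initial * (1.0 + annual_rate) ** years


# Spending normalized to 1.0 in year 0 (an assumed baseline, not real data).
for year in range(6):
    multiple = compound_growth(1.0, 0.55, year)
    print(f"year {year}: {multiple:.2f}x the starting level")
```

At that rate, spending would roughly double in well under two years and reach almost nine times the starting level after five, if the growth rate held.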

One word of caution – like Daniel mentioned during our first lecture, disruptive technologies create a lot of hype, and AI is no different. A lot of people are getting carried away, so be careful and critical about what you read!


Hi Lucas, I really appreciate the explanation of the difference between ML, AI (especially narrow or weak AI), and DL, as the terms seem to be thrown around interchangeably far too often. One thing I find interesting about ML and DL compared to the styles of AI that have existed in the past is the importance of good data. Because these algorithms ‘learn’ from a data set, we have seen an explosion in the usefulness of “big data”. However, this also means that we need quality data sets to get a quality result (something that often seems to get overlooked).

http://www.information-age.com/artificial-intelligence-requires-cleaned-mastered-data-123466232/

While machine learning races ahead with many innovations and advanced hardware, I am curious to see what breakthroughs will surface in data collection, classification, and so on to enable truly useful end products.

I agree with the previous commenters – the post was very informative, thank you!

I would like to share some thoughts on a topic that I found very interesting: the future of deep learning. As you mentioned in your post, companies such as Apple and Google are bringing deep learning into their products, and it is indeed considered the future direction. In many cases, traditional ML problems can be solved by deep learning approaches, and deep learning methods have achieved state-of-the-art performance in many fields. This raises the question of whether there will be a place for traditional machine learning in the future, or whether deep learning will be the end of it.

As discussed in articles such as [1] and [2], some challenges related to deep learning include the following:

1) Reliance on large amounts of training data

Deep learning methods require large training data sets, which are not available for all problems.

2) Training time

Deep learning requires a lot of time to train and test (even with GPUs), whereas traditional machine learning models can be formed with relatively small computational power.

3) Complexity

Setting up the suitable number of layers and optimizing many hyperparameters can be difficult.

4) Interpretability

A model that achieves high performance has likely found important patterns in the data. Interpreting and understanding these patterns is important in many applications, such as medical and biological problems. However, interpretation may not be possible if the model is highly complex.
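To illustrate why challenges 2) and 3) compound each other, here is a rough sketch that enumerates a small hyperparameter grid. Every name and value in `search_space`, and the two-hour cost per training run, is a made-up assumption for illustration and not taken from the cited articles.

```python
from itertools import product

# Hypothetical search space: all names and values are illustrative only.
search_space = {
    "num_layers": [2, 4, 8, 16],
    "units_per_layer": [64, 128, 256, 512],
    "learning_rate": [0.1, 0.01, 0.001],
    "dropout": [0.0, 0.25, 0.5],
    "batch_size": [32, 64, 128],
}


def grid(space):
    """Yield every combination of hyperparameter values as a dict."""
    names = list(space)
    for values in product(*(space[name] for name in names)):
        yield dict(zip(names, values))


configs = list(grid(search_space))
HOURS_PER_RUN = 2  # assumed cost of training one configuration
print(f"{len(configs)} configurations, "
      f"about {len(configs) * HOURS_PER_RUN} GPU hours for a full sweep")
```

Even this modest grid multiplies out to hundreds of training runs, which is one reason smarter search strategies, or simpler models, are often preferred in practice.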

In conclusion, even though deep learning offers a lot of opportunities, basic ML methods may still be more suitable in some situations. Nevertheless, it will definitely be interesting to see what the future of ML and DL will look like and how they will be utilized in upcoming products!

1) Chellappa R. The Changing Fortunes of Pattern Recognition and Computer Vision. Image and Vision Computing 2016;55:1:3-5 Available: http://www.sciencedirect.com/science/article/pii/S026288561630066X

2) Ching T et al. Opportunities and Obstacles for Deep Learning in Biology and Medicine. bioRxiv 2017 (preprint). Available: http://www.biorxiv.org/content/early/2017/05/28/142760
