Generative Adversarial Networks: The Future of Deep Learning?

While deep learning models have achieved state-of-the-art results in image recognition and natural language processing tasks, their capabilities are still limited (https://www.cs.toronto.edu/~vmnih/docs/dqn.pdf, https://www.nature.com/nature/journal/v518/n7540/pdf/nature14236.pdf). Humans can learn patterns from very little data, but current deep learning models require thousands of samples (http://www.cell.com/neuron/pdf/S0896-6273(17)30509-3.pdf). Similarly, humans can succeed at a wide variety of tasks, but deep learning models are trained on and applied to only very specific problems. Consider, for example, DeepMind’s Asynchronous Advantage Actor-Critic (A3C) agent, which achieved state-of-the-art results on Atari games while requiring significantly less training time than earlier approaches (https://www.cs.toronto.edu/~vmnih/docs/dqn.pdf).

The results seem impressive, as DeepMind’s A3C agents are able to outperform human players in several Atari games. In reality, however, the models fail to understand the underlying principles of the game: when, for example, the paddle in Breakout is moved up by a few pixels, the agent can no longer play the game successfully (https://www.vicarious.com/img/icml2017-schemas.pdf). Many current state-of-the-art deep learning models have such limitations: they cannot cope with minor changes and cannot be applied to a broad range of tasks (https://www.vicarious.com/img/icml2017-schemas.pdf). Generative adversarial networks have the potential to overcome some of these issues (http://papers.nips.cc/paper/5423-generative-adversarial-nets.pdf). By training against each other, the neural networks can learn from less data and perform better on a broader range of problems (http://papers.nips.cc/paper/5423-generative-adversarial-nets.pdf).

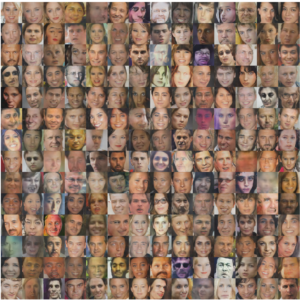

Generative adversarial networks consist of two deep neural networks. Instead of competing against humans, the two networks compete against each other in a zero-sum game. As each tries to exploit the other’s weaknesses while learning from its own, both can become strong competitors in a relatively short period of time. Using generative adversarial networks, Salimans et al. were able to achieve state-of-the-art results on the CIFAR-10, MNIST, and SVHN semi-supervised classification tasks (http://papers.nips.cc/paper/6125-improved-techniques-for-training-gans.pdf). They taught one of the networks, a generator network, to create images of hand-written digits; humans were not able to distinguish these digits from real hand-written digits. They also trained another neural network to create images of objects (http://papers.nips.cc/paper/6125-improved-techniques-for-training-gans.pdf). Humans could correctly tell these machine-created images from real ones only 78.7% of the time (http://papers.nips.cc/paper/6125-improved-techniques-for-training-gans.pdf). Below are some example images of faces created entirely by using a generative adversarial network (https://arxiv.org/pdf/1511.06434.pdf).

One of the major advantages of generative adversarial models is that they can be trained effectively with less training data (https://arxiv.org/pdf/1511.06434.pdf). One of the two networks is called the generator and the other the discriminator. The task of the generator is to create data samples that are so similar to the real data samples that the discriminator cannot tell them apart. The discriminator network sometimes receives real data samples and sometimes receives forged samples created by the generator. Its task is to identify which samples are real and which are fakes from the generator.
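
The competition between the two networks can be written as a single objective. The formula below is the minimax value function from Goodfellow et al. (2014); the symbols are not used elsewhere in this post and are introduced here only for illustration: $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the sample the generator produces from random noise $z$, $p_{\text{data}}$ is the distribution of real samples, and $p_z$ is the noise distribution.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make $V$ large by assigning high probability to real samples and low probability to forged ones, while the generator tries to make $V$ small by producing samples the discriminator accepts as real.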

Backpropagation is used to update the model parameters and train the neural networks. Over time, the networks learn many features of the provided data. In order to create realistic forged samples, the generator has to gain a deep understanding of the samples in the dataset; it needs to learn their features and patterns. Similarly, the discriminator has to learn many of the features of the data in order to correctly distinguish fake samples from real ones. By training against each other, the neural networks continue to get stronger even without much training data. The discriminator does not rely only on samples from the dataset; it can also learn about the data from the fake samples created by the generator. Consequently, the deep learning models need considerably less data during the training process (https://arxiv.org/pdf/1511.06434.pdf).
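
To make the alternating updates concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions, not the setup used in any of the cited papers: the "real" data are one-dimensional draws from a normal distribution with mean 4, the generator is the linear map `G(z) = a*z + b`, the discriminator is a logistic unit `D(x) = sigmoid(w*x + c)`, and the gradients are written out by hand instead of using a deep learning framework. The function name `train_toy_gan`, all parameter names, and the hyperparameters are hypothetical. The generator step uses the non-saturating loss `-log D(G(z))`, a common variant of the original objective.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def mean(values):
    return sum(values) / len(values)


def train_toy_gan(steps=2000, batch=64, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on 1-D data.

    Hypothetical setup: real samples ~ N(4, 1); G(z) = a*z + b;
    D(x) = sigmoid(w*x + c). Returns ((a, b), (w, c), history of b).
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.0, 0.0   # discriminator parameters
    history = []
    for _ in range(steps):
        # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        fake = [a * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_w = (mean([-(1 - d) * x for d, x in zip(d_real, real)])
                  + mean([d * x for d, x in zip(d_fake, fake)]))
        grad_c = mean([-(1 - d) for d in d_real]) + mean(d_fake)
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimise -log D(G(z)) (non-saturating variant).
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]
        # Backpropagate through D into each generated sample:
        # d/dx of -log sigmoid(w*x + c) is -(1 - D(x)) * w.
        grad_x = [-(1 - sigmoid(w * x + c)) * w for x in fake]
        a -= lr * mean([g * z for g, z in zip(grad_x, noise)])
        b -= lr * mean(grad_x)
        history.append(b)
    return (a, b), (w, c), history
```

Calling `train_toy_gan()` returns the final generator and discriminator parameters together with the trajectory of the generator offset `b`. The first updates push `b` away from 0 toward the real data, because the only training signal the generator receives is the gradient flowing back through the discriminator. Whether and how smoothly the trajectory settles depends on the step size and batch size; adversarial training is known to oscillate, so the sketch makes no claim about convergence.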

Goodfellow, Ian, et al. “Generative adversarial nets.” Advances in neural information processing systems. 2014. http://papers.nips.cc/paper/5423-generative-adversarial-nets.pdf

Hassabis, Demis, et al. “Neuroscience-Inspired Artificial Intelligence.” Neuron 95.2 (2017): 245-258. http://www.cell.com/neuron/pdf/S0896-6273(17)30509-3.pdf

Kansky, Ken, et al. “Schema Networks: Zero-shot Transfer with a Generative Causal Model of Intuitive Physics.” arXiv preprint arXiv:1706.04317 (2017). https://www.vicarious.com/img/icml2017-schemas.pdf

Mnih, Volodymyr, et al. “Human-level control through deep reinforcement learning.” Nature 518.7540 (2015): 529-533. https://www.nature.com/nature/journal/v518/n7540/pdf/nature14236.pdf

Mnih, Volodymyr, et al. “Playing Atari with deep reinforcement learning.” arXiv preprint arXiv:1312.5602 (2013). https://www.cs.toronto.edu/~vmnih/docs/dqn.pdf

Radford, Alec, Luke Metz, and Soumith Chintala. “Unsupervised representation learning with deep convolutional generative adversarial networks.” arXiv preprint arXiv:1511.06434 (2015). https://arxiv.org/pdf/1511.06434.pdf

Salimans, Tim, et al. “Improved techniques for training GANs.” Advances in Neural Information Processing Systems. 2016. http://papers.nips.cc/paper/6125-improved-techniques-for-training-gans.pdf

2 comments on “Generative Adversarial Networks: The Future of Deep Learning?”

Hi Teun – GANs really are an exciting breakthrough in deep learning! At Recondo, we are applying machine learning to a variety of problems to improve efficiency and accuracy. Some of those problems are hard to solve because it is difficult to come up with accurately labeled data. Without labeled data, there are severe limitations in what we can do with ML. We are considering using GANs to overcome this problem. What have you learned about the stability of GANs and their ability to find a true optimum? With all of the sensationalized stories about Facebook abandoning a particular experiment using GANs because the networks developed their own language (looks like they didn’t have a comprehensive cost function that penalized non-English responses), have you found any information on the pitfalls of training GANs?

There is a lot of information about the pitfalls of training generative adversarial models in this paper by Ian Goodfellow et al. (https://arxiv.org/pdf/1701.00160.pdf). Perhaps you will find it useful.