Emotion recognition – a deep dive into human emotions

Emotion recognition builds on a long body of research dating back to the 1960s. One of the pioneers of the field is Paul Ekman, a well-known American psychologist who studied the relationship between emotions and facial expressions. (1) Ekman created a catalog of over 5,000 muscle movements to show how small facial micro-gestures, such as lifting an eyebrow or wrinkling the nose, reveal hidden emotions. (2) These gestures were developed into the Facial Action Coding System (FACS), which taxonomizes facial movements according to their appearance on the face. FACS codes the movements of specific facial muscles and rapid changes in facial expression, and it serves as a standard for categorizing the expression of emotions. (3)
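
To make the idea of FACS coding more concrete, here is a minimal Python sketch that treats a coded expression as a set of numbered action units (AUs) and scores it against prototype AU combinations. The prototypes are simplified versions of commonly cited EMFACS-style combinations (for example, AU6 "cheek raiser" plus AU12 "lip corner puller" for happiness) with intensity codes dropped; they are an illustrative assumption rather than Ekman's full coding rules, and the name `match_emotions` is hypothetical.

```python
# Simplified prototype action-unit (AU) combinations per emotion.
# Assumption: EMFACS-style prototypes with intensity letters (e.g. "5B") dropped.
PROTOTYPES: dict[str, frozenset[int]] = {
    "happiness": frozenset({6, 12}),    # cheek raiser + lip corner puller
    "sadness": frozenset({1, 4, 15}),   # inner brow raiser + brow lowerer + lip corner depressor
    "surprise": frozenset({1, 2, 5, 26}),
    "fear": frozenset({1, 2, 4, 5, 7, 20, 26}),
    "anger": frozenset({4, 5, 7, 23}),
    "disgust": frozenset({9, 15, 16}),
}


def match_emotions(observed_aus: set[int]) -> list[tuple[str, float]]:
    """Score each emotion by the fraction of its prototype AUs that were observed.

    Returns (emotion, score) pairs sorted from best to worst match and keeps
    only emotions that share at least one AU with the observation.
    """
    scores = []
    for emotion, prototype in PROTOTYPES.items():
        overlap = len(prototype & observed_aus)
        if overlap:
            scores.append((emotion, overlap / len(prototype)))
    # Highest score first; ties are broken alphabetically for stable output.
    return sorted(scores, key=lambda pair: (-pair[1], pair[0]))


if __name__ == "__main__":
    # A coder observed AU6 (cheek raiser), AU12 (lip corner puller) and AU25 (lips part).
    print(match_emotions({6, 12, 25}))  # [('happiness', 1.0)]
```

Real FACS-based systems weigh intensities and timing as well; the set-overlap score here only illustrates how discrete muscle movements can be mapped to emotion categories.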

In 2014, the Dalai Lama commissioned Ekman to create an Atlas of Human Emotion, which serves as a “map of the mind” to help people navigate their feelings and reach inner peace. (4) Ekman started off by surveying 149 leading emotion researchers, including psychologists, neuroscientists and emotion scientists, to find out whether there was agreement on the nature of emotions and on which moods or states these emotions produce. The results indicated five broad categories of emotion, namely enjoyment, fear, sadness, disgust and anger, each with its own subset of emotional states, moods, triggers and actions. Together with the data visualization and cartography company Stamen, Ekman and the Dalai Lama used the survey results to co-create the Atlas of Human Emotion, an interactive tool for emotional awareness that visualizes the landscape of human emotion, shown in Figure 1 below. (5, 6)

Figure 1: A diagram from the Atlas of Emotions (Ekman 2016)

The research conducted by Ekman has provided a foundation for modern emotion recognition technology, an interdisciplinary field combining psychology, neuroscience, biology and computer science. Recent advances in artificial intelligence and increasing computer processing power have enabled face recognition techniques to become more proficient at identifying human emotions from image and video data. (2) The global emotion detection and recognition market was valued at $6.72 billion in 2016 and is projected to grow to $36.07 billion by 2021, a compound annual growth rate (CAGR) of 39.9%. The market is being pursued both by established technology giants, including Microsoft, IBM, Facebook, Google, Amazon and Apple, and by startups such as Kairos, Affectiva, Emotient, Eyeris and Realeyes, to name a few. (7, 8)
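
As a quick sanity check on the market figures, the compound annual growth rate can be recomputed from the start and end values with CAGR = (end / start)^(1 / years) - 1. The sketch below assumes the 2016 to 2021 forecast spans five compounding years; `cagr` is a hypothetical helper name.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Market figures quoted above: $6.72B (2016) -> $36.07B (2021), assumed to be 5 years.
    rate = cagr(6.72, 36.07, 2021 - 2016)
    print(f"CAGR = {rate:.1%}")  # prints "CAGR = 39.9%"
```

The recomputed rate agrees with the 39.9% quoted in the market report.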

Kairos has developed a service called the Human Analytics platform that lets clients add demographic, identity and emotion data to their products. The service includes face detection, face identification, face verification, emotion detection, demographic and feature detection, multi-face tracking and face grouping. (8, 9) Amazon acquired the startup Orbeus in 2015, integrated it into the Amazon Web Services ecosystem, and in 2016 launched its deep learning image recognition service, Amazon Rekognition. Rekognition’s features include object and scene detection, facial analysis with sentiment tracking, image moderation for detecting explicit content, face comparison, face recognition and celebrity recognition. (8, 10)
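
To illustrate what facial analysis with sentiment tracking looks like from a developer’s side, here is a minimal Python sketch against Amazon Rekognition’s DetectFaces operation through the boto3 SDK. It assumes AWS credentials and a region are already configured; the helper names `detect_emotions` and `dominant_emotions` are hypothetical, and the sample response is hand-written for illustration, not real service output.

```python
from typing import Any


def dominant_emotions(response: dict[str, Any]) -> list[tuple[str, float]]:
    """Return the highest-confidence emotion (type, confidence) for each detected face."""
    results = []
    for face in response.get("FaceDetails", []):
        emotions = face.get("Emotions", [])
        if not emotions:
            continue  # Emotions are not part of the default attribute set
        best = max(emotions, key=lambda e: e["Confidence"])
        results.append((best["Type"], best["Confidence"]))
    return results


def detect_emotions(image_path: str) -> list[tuple[str, float]]:
    """Call Rekognition DetectFaces on a local image (requires AWS credentials)."""
    import boto3  # imported lazily so the parsing helper works without the AWS SDK

    client = boto3.client("rekognition")
    with open(image_path, "rb") as image_file:
        response = client.detect_faces(
            Image={"Bytes": image_file.read()},
            Attributes=["ALL"],  # request the full attribute set, including Emotions
        )
    return dominant_emotions(response)


if __name__ == "__main__":
    # Hand-written fragment in the shape DetectFaces returns (illustrative values only).
    sample = {
        "FaceDetails": [
            {"Emotions": [{"Type": "HAPPY", "Confidence": 92.5},
                          {"Type": "CALM", "Confidence": 5.1}]},
        ]
    }
    print(dominant_emotions(sample))  # [('HAPPY', 92.5)]
```

Keeping the response parsing separate from the network call makes the emotion-selection logic easy to test without an AWS account.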

The Google Vision API is part of the search engine giant’s Cloud Platform and is used for content analysis, classifying image and video data into thousands of categories. The Vision API’s core features include explicit content detection, image sentiment analysis with face detection, optical character recognition with automatic language identification, and video intelligence for detecting scenes, objects and entities. (8, 11) Microsoft’s Cognitive Services platform offers several Vision APIs, including the Face API and the Emotion API. The Face API analyzes facial data in images and video, and its core features include face verification, face detection, face recognition, similar face search and face grouping. The Emotion API recognizes emotions in image and video data by analyzing people’s facial expressions. (8, 12)
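
Unlike Rekognition’s confidence percentages, Google Cloud Vision’s face detection reports each emotion (joy, sorrow, anger, surprise) as a likelihood level from UNKNOWN to VERY_LIKELY. The following minimal sketch assumes the `google-cloud-vision` client library and configured Google Cloud credentials; `analyze_faces`, `strongest_emotion` and the POSSIBLE cutoff are hypothetical choices made for illustration.

```python
from typing import Optional

# Likelihood levels in the order Cloud Vision encodes them (0 = UNKNOWN ... 5 = VERY_LIKELY).
LIKELIHOOD_NAMES = ("UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY")
EMOTION_FIELDS = ("joy", "sorrow", "anger", "surprise")


def strongest_emotion(levels: dict[str, int]) -> Optional[tuple[str, str]]:
    """Pick the emotion with the highest likelihood level.

    Returns None when no emotion reaches POSSIBLE (an assumed cutoff).
    """
    if not levels:
        return None
    emotion, level = max(levels.items(), key=lambda item: item[1])
    if level < LIKELIHOOD_NAMES.index("POSSIBLE"):
        return None
    return emotion, LIKELIHOOD_NAMES[level]


def analyze_faces(image_path: str) -> list[Optional[tuple[str, str]]]:
    """Run Cloud Vision face detection on a local image (requires credentials)."""
    from google.cloud import vision  # imported lazily; needs google-cloud-vision

    client = vision.ImageAnnotatorClient()
    with open(image_path, "rb") as image_file:
        image = vision.Image(content=image_file.read())
    response = client.face_detection(image=image)
    results = []
    for face in response.face_annotations:
        # e.g. face.joy_likelihood, face.sorrow_likelihood, ... converted to ints
        levels = {name: int(getattr(face, f"{name}_likelihood")) for name in EMOTION_FIELDS}
        results.append(strongest_emotion(levels))
    return results


if __name__ == "__main__":
    # Illustrative levels for one face: joy VERY_LIKELY (5), the rest VERY_UNLIKELY (1).
    print(strongest_emotion({"joy": 5, "sorrow": 1, "anger": 1, "surprise": 1}))  # ('joy', 'VERY_LIKELY')
```

Because the levels are ordinal rather than probabilities, the sketch compares them by rank instead of averaging them.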

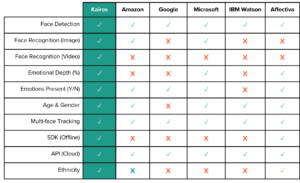

IBM Watson’s Visual Recognition API is part of the Watson Developer Cloud, which runs on the IBM Bluemix cloud development platform. The Visual Recognition API uses deep learning to help companies understand image content through face detection, celebrity recognition, object recognition and scene recognition. It also allows clients to create and train custom image classifiers using their own image collections. (8, 13) Affectiva provides emotion recognition software and analysis for image and video data. Affectiva’s Emotion SDK and API analyze facial expressions and can identify 7 emotions, 20 expressions and 13 emojis, as well as a person’s age, gender and ethnicity. The company reports having the world’s largest emotion database, with over 5 million faces analyzed from 75 countries, and offers Emotion as a Service for obtaining emotion metrics. The company also offers Affdex for Market Research, which helps brands and marketers optimize their digital content and media spending by understanding customers’ emotional responses to their videos and TV ads. (8, 14) The emotion recognition service providers and their features are compared in Figure 2 below.

Figure 2: Emotion recognition services (Virdee-Chapman 2017)

The growing number of established corporations and startups providing emotion recognition technology has resulted in a variety of use cases across industries. In advertising, Fortune 100 companies use the technology to optimize their marketing content and media placement. In the gaming industry, game studios are using emotion recognition to build more emotionally aware games. Education startups are using the technology to increase their apps’ engagement and make learning more effective and enjoyable by analyzing students’ facial expressions and emotions. (15) In healthcare, emotion recognition can be applied to telemedicine, mental health research, drug efficacy and autism support. Facial expressions can serve as indicators of mental health disorders such as depression, anxiety and trauma, and emotion recognition can be used to quantify emotions and design better treatment programs for patients. (16)

In finance, emotion recognition can help financial advisors better understand their customers’ financial needs and decision-making processes with emotional intelligence. (17) In the car industry, emotion recognition can increase safety by monitoring the emotional state of the driver and passengers, and it can enhance the overall user experience. (18) In politics and journalism, emotion recognition can be used to better understand politicians’ emotions, for instance when analyzing presidential debates. (19) As technological development accelerates and the technologies behind emotion recognition continue to advance rapidly, the future of emotion recognition looks bright, and it may help us better understand the depths of the human mind.

Sources

(1) Wikipedia (2017). Paul Ekman. Retrieved at: https://en.wikipedia.org/wiki/Paul_Ekman

(2) Dwoskin E., Rusli E. (2015, January 28) The Technology that Unmasks Your Hidden Emotions. Retrieved at: https://www.wsj.com/articles/startups-see-your-face-unmask-your-emotions-1422472398

(3) Wikipedia (2017). Facial Action Coding System. Retrieved at: https://en.wikipedia.org/wiki/Facial_Action_Coding_System

(4) Rodenbeck E. (2016, April 26). Introducing the Atlas of Emotions, our new project with the Dalai Lama and Paul & Eve Ekman. Retrieved at: https://hi.stamen.com/in-2014-the-dalai-lama-asked-his-friend-scientist-dr-2a46f0c6bd80

(5) Randall K. (2016, May 6). Inner Peace? The Dalai Lama Made a Website for That. Retrieved at: https://www.nytimes.com/2016/05/07/world/dalai-lama-website-atlas-of-emotions.html?_r=0

(6) Ekman P. (2016). Atlas of emotions. Retrieved at: http://atlasofemotions.org/

(7) Report Buyer (2017, April 18). Emotion Detection and Recognition Market by Technology, Software Tool, Service, Application Area, End User, and Region – Global Forecast to 2021. Retrieved at: http://www.prnewswire.com/news-releases/emotion-detection-and-recognition-market-by-technology-software-tool-service-application-area-end-user-and-region—global-forecast-to-2021-300441215.html

(8) Virdee-Chapman B. (2017, January 9). Face Recognition: Kairos vs Microsoft vs Google vs Amazon vs OpenCV. Retrieved at: https://www.kairos.com/blog/face-recognition-kairos-vs-microsoft-vs-google-vs-amazon-vs-opencv

(9) Kairos (2017). Kairos Human Analytics. Retrieved at: http://www.kairos.com/

(10) Amazon (2017). Amazon Rekognition. Retrieved at: https://aws.amazon.com/rekognition/

(11) Google (2017). Google Vision API. Retrieved at: https://cloud.google.com/vision/

(12) Microsoft (2017). Microsoft Cognitive Services. Retrieved at: https://www.microsoft.com/cognitive-services/en-us/

(13) IBM (2017). IBM Watson Visual Recognition API. Retrieved at: https://www.ibm.com/watson/developercloud/visual-recognition

(14) Affectiva (2017). Affectiva’s Emotion AI. Retrieved at: http://www.affectiva.com/

(15) El Kaliouby R. (2016, December 29). Emotion Technology Year in Review: Affectiva in 2016. Retrieved at: http://blog.affectiva.com/emotion-technology-year-in-review-affectiva-in-2016

(16) Affectiva (2017). Healthcare. Retrieved at: https://www.affectiva.com/what/uses/healthcare/

(17) IBM (2016). Helping financial advisors understand their clients’ true financial needs with emotional intelligence. Retrieved at: http://ecc.ibm.com/case-study/us-en/ECCF-CDC12366USEN

(18) McManus A. (2017, April 11). Driver Emotion Recognition and Real Time Facial Analysis for the Automotive Industry. Retrieved at: http://blog.affectiva.com/driver-emotion-recognition-and-real-time-facial-analysis-for-the-automotive-industry

(19) Bernegger W. (2017, February 22). Behind the Scenes: How we Made the Trump Emoto-Coaster. Retrieved at: http://www.periscopic.com/news/behind-the-scenes-on-the-trump-emoto-coaster

13 comments on “Emotion recognition – a deep dive into human emotions”

Great and interesting article, Tuomas! I want to apply this discussion to today’s digital assistant technologies (Siri, Alexa, Google Assistant, etc.).

Do you imagine the concept of emotion recognition being seen in these voice-activated devices/applications? For example, if I raise my tone when speaking to Siri, she could be programmed to acknowledge my change in emotion and use a more tender voice and choice of words for assisting me.

Moreover, what if these technologies used a camera to view their users’ faces and understand their emotions based on the small facial micro-gestures that indicate different emotions in us? I’m sure these upgrades would raise the level of assistance that these technologies can offer!

Hi Jared,

Thank you for your comment!

When I was looking for material for this post, I stumbled upon this article https://www.technologyreview.com/s/601654/amazon-working-on-making-alexa-recognize-your-emotions/ which shows that Amazon & co. are using voice recognition to train their assistants’ emotional awareness. Also, https://www.cbinsights.com/research/facebook-emotion-patents-analysis/ was a very interesting piece on Facebook’s new patents relating to micro-gestures.

Hi, first of all, I am blown away by this post. I found it fun to read as well as easy to understand for someone with no knowledge of psychology or AI. I connected most with the many ways you showed this technology could be applied to different devices. The thought of an application that could read my facial expression and then infer what mood I was in is outlandish. I read a Time article (http://time.com/14478/emotions-may-not-be-so-universal-after-all/) explaining that emotion can be cultural and actually very personal. For instance, the article describes collecting images from different cultures in which people were shown smiling; the emotions behind these expressions proved elusive to the investigators who sought to pin down which specific emotions the facial expressions conveyed. Westerners’ smiles express a range of emotions, from wonder to laughter to happiness, which differ in meaning for a tribe of people in Namibia. But perhaps tribes of people in Namibia that have no connection to wider society would not be using these devices with facial recognition software. I agree with the Time article that culture has a large influence on how we convey our expressions. Ultimately, I guess my question is: how universal are emotions?

Hi Foster,

Thank you for your comment!

I agree with your view and with what the Time article says about how culture influences the expression of emotions. However, how well humans are able to detect others’ emotions has been questioned, and some argue that machines would do a better job via facial recognition. Here is a fun video on human vs. machine featuring Kairos, one of the companies in the post: https://techcrunch.com/2017/05/29/judah-vs-the-machines-kairos-face-recognition-ai-can-tell-how-you-feel-but-does-it-know-what-you-dream/

A nice, excellent article on using image recognition technologies to detect human emotions. I think there are already a lot of pictures online that show people in different emotional states, so if you have an algorithm, training it would be easy, since there is already a broad cross-section of images you could use to train your model. I think there could be apps that use this type of emotion recognition technology to determine the emotions of the people in a photo and then label the picture or automatically match it with background music in the same style (for example, a video showing happy faces could be paired with happy background music). It would be beneficial if machines could understand what humans are thinking based on their emotions.

Class: Su17-MS&E-238A-01

Hi Shunzhe,

Thank you for your comment!

Affectiva, which is discussed in the article, has gathered a large emotion database (http://blog.affectiva.com/the-worlds-largest-emotion-database-5.3-million-faces-and-counting), and Kairos has listed 60 more facial recognition databases (https://www.kairos.com/blog/60-facial-recognition-databases). Using emotion data to select matching music is an interesting idea, and it seems that some people have done research on it: https://ijircce.com/upload/2017/february/205_Emotion_NC.pdf (disclaimer: I am not familiar with this journal)

Hi Tuomas! Such an interesting topic!

As a technology journalist and someone passionate about emotions myself, I had the chance to interview Richard Bandler, the co-creator of Neuro-Linguistic Programming (NLP). I wonder how NLP could be used in the system you describe; according to Bandler, it is already being used by intelligence agencies, governments and major technology companies, though this is not generally known.

My article was published in Spanish and will soon be published in English, at which point I will share it. Meanwhile, here is a link to an interview with Richard Bandler by someone else, to give an idea of NLP and its advantages in “thinking on purpose”, considering that if we change the way we think, it changes the way we feel and it changes what we can do.

https://www.youtube.com/watch?v=PBXM431_8bM&t=1562s

Warmly,

Meli

Hi Meli,

Thank you for your comment!

I’m a big believer in multidisciplinary science, and there must be a number of ways to use NLP together with emotion recognition based on facial data. It’s worth exploring the different APIs mentioned in the post to see whether they could be used for NLP. Here is an additional set of APIs that might prove useful (http://nordicapis.com/20-emotion-recognition-apis-that-will-leave-you-impressed-and-concerned/). Besides face recognition, I would look into how text recognition and speech recognition could benefit NLP research. Amazon is one company that is looking into understanding emotions better via speech data: https://www.technologyreview.com/s/601654/amazon-working-on-making-alexa-recognize-your-emotions/

Hello Tuomas,

Your post was very interesting. I really think mental well-being is an integral part of our health that unfortunately is not always paid attention to. I was surprised that the Atlas of Emotions only considered one positive feeling, enjoyment, and I believe there is a greater spectrum of positive emotions; they can help us overcome the negative ones and are just as important. I am looking forward to emotion recognition helping us treat patients or improve our states of mind in general.

But back to the IT application of emotions: I checked out the Affectiva website from your references and saw that they were having Siri and Alexa respond to users’ emotions. I thought that was super cool; I mean, how many times have we called our computers stupid, even though they help us on a daily basis? I think it would be a very convenient feature for users and could increase sales substantially.

Looking forward to the technology,

Sara Dabzadeh – MS&E 238A

MS&E 238-A:

Tuomas,

This was a super fun article to read, Tuomas! To start off, I find it interesting how world leaders today are working with emerging technologies such as emotion tracking and recognition! It’s also interesting to see how each of these world leaders seems to apply the same technology for different purposes.

I’m going to approach this topic from the opposite direction. Just as with any new power, there are always going to be people who use it for good and others who use it for bad. If ad agencies are using facial and emotion tracking to grow their audiences, it can be said that such technology could also be used to win elections all over the world! Today, elections are more about big data and emotion recognition than anything else. Election strategists collect information beforehand about the crowd a politician is going to address and use this information to draft a more convincing speech to win votes. In other words, big data is being used in elections to manipulate people into voting for a particular candidate. Similarly, emotion recognition is becoming another popular and powerful tool for studying how people react to what an election candidate has to say, and by understanding these reactions, campaign strategists all over the world now come up with handcrafted speeches to gain people’s loyalty. Could we then safely say that leaders all over the world tell you what you want to hear and not what their actual vision and motives for their people are? Is it fair for voters to be manipulated through such tactics? What happens to the candor of our social construct? Is it slowly crumbling to a point of no return? And how does one control the abuse of a technology whose impact on society is immense?

Hey Tuomas,

I must say, excellent post. Great summary of the technology, the major players, the current applications and the future potential. I was looking at your graphic on emotion recognition services, and it’s clear that Kairos is the preferred or “superior” offering based on functionality… That being said, having the most features and functions does not necessarily make it the best. Is there a particular reason beyond features and functions why it’s the best? What does the accuracy look like? What types of emotions can it recognize, and what is the breadth?

One thing that wasn’t mentioned in the article was the use of speech recognition in conjunction with image recognition to enhance the accuracy of emotion recognition. Do these startups use vocabulary or tone of voice to improve their emotion recognition results? I thought your section on applications was very well done; the ability to capture people’s emotions without them having to express or communicate them verbally is a huge opportunity. It’ll definitely give companies the ability to improve the overall experience of their products.

Another interesting (and potentially dystopian) use for emotion recognition technology is in the security realm. Organizations like the Transportation Security Administration (TSA) and the Department of Homeland Security/Department of Defense have shown a lot of interest in developing and implementing these technologies for use at airports, border checkpoints, military bases and other potential targets. While TSA agents and customs personnel have long been trained to read and monitor the emotional and mental states of those passing through their checkpoints, these systems could potentially do the work more effectively and efficiently and transform the security services provided. There are numerous privacy challenges with a technology like this, and the potential for abuse by governments around the globe is incredibly high. Despite this, I think we will see these technologies, coupled with wider machine learning/AI monitoring technologies, proliferate in these fields over the next decade.

Thanks, Tuomas, for the enlightening article! This whole topic is super interesting! I agree that emotion recognition has massive potential for the future!

“With great power comes great responsibility.” (Uncle Ben in Spider-Man) Of course, there is a lot that humankind can gain from emotion recognition services and technologies, but they also open up a whole lot of ethical questions that no one has the answer to yet. For example, how far can you go without invading privacy? We have reached a point where neither religion nor human rights provide explicit values to follow. So it is our responsibility to define precisely which guidelines we want to follow in order to use this potentially magical technology, which could have an impact on everybody’s life, in a responsible way.