Two Tech Behemoths, Two Different Approaches

The way users interact with computers has steadily evolved and is arguably on the cusp of another inflection point. Our interaction with computing took off with the advent of the keyboard, mouse and graphical user interface (GUI), then shifted toward mobile with the introduction of the multi-touch screen. In an AI-first world, the interaction model is shifting toward a more natural and immersive experience centered on voice and vision. Having watched the Google I/O 2017 keynote and the Apple WWDC 2017 keynote, we came away with the following takeaways:

Google I/O Takeaways [1]

Google’s core mission is to organize information and make it useful. At Google I/O, Google introduced a whole host of products and services, ranging from Google Lens (an AR tool that helps you understand your surroundings) to Google Assistant (an AI assistant that interacts with you to get things done). Powering these products and services are four core competencies:

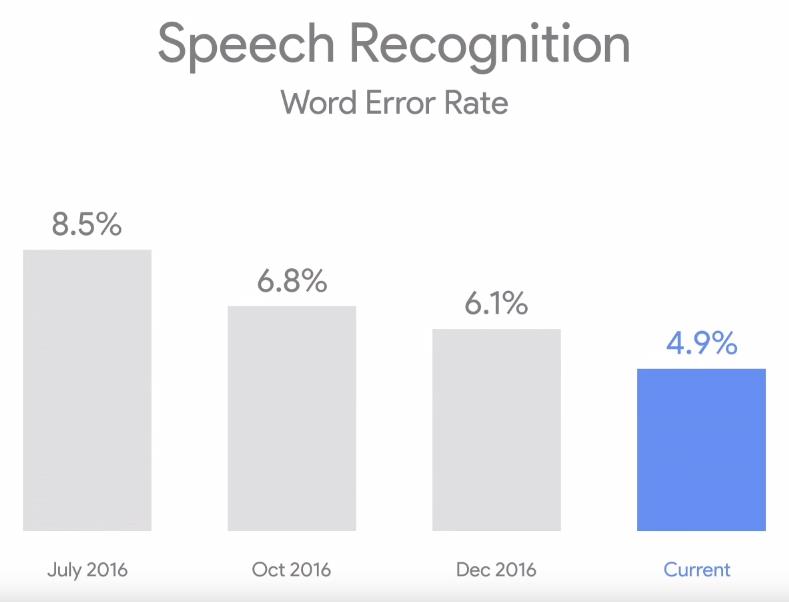

- Speech recognition has continued to improve, with the word error rate dropping 3.6 percentage points, from 8.5% in July 2016 to 4.9% today (a relative reduction of roughly 42%). Driving these improvements are advances in deep learning and neural network adaptive beamforming (NAB). A shortcoming of earlier speech recognition systems was that the filters learned during training stayed fixed during decoding, which limited their ability to adapt to unpredictable or changing acoustic conditions. [2] Google’s new neural beamforming techniques let it pinpoint and distinguish multiple speakers in noisy environments with less hardware, and give each of them a customized experience.
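
To make the speech metric concrete: word error rate (WER) is conventionally computed as the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the recognizer's output, divided by the number of words in the reference. The sketch below is a generic textbook implementation, not Google's evaluation code, and the example utterance is hypothetical. It also works through why a drop from 8.5% to 4.9% is 3.6 percentage points rather than 3.6%.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = fewest word edits turning the first i reference words
    # into the first j hypothesis words (Levenshtein distance over words).
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# Hypothetical utterance: one deleted word ("the") and one substitution ("lights" -> "light").
example_wer = word_error_rate("turn on the kitchen lights", "turn on kitchen light")
print(f"WER: {example_wer:.0%}")  # WER: 40%

# The figures quoted in the post: 8.5% (July 2016) -> 4.9% (2017).
old_wer, new_wer = 0.085, 0.049
absolute_drop = old_wer - new_wer          # difference of two rates
relative_drop = absolute_drop / old_wer    # fraction of the old errors removed
print(f"{absolute_drop * 100:.1f} percentage points, {relative_drop:.0%} relative")
# 3.6 percentage points, 42% relative
```

Using edit distance rather than a position-by-position comparison matters here: a single dropped word would otherwise shift every later word out of alignment and inflate the error count.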

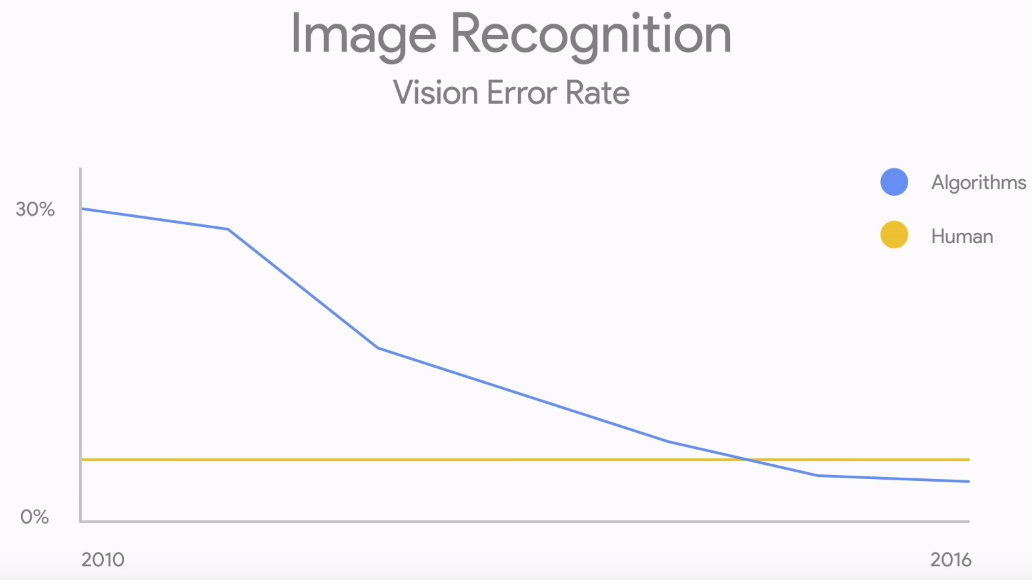

- Vision recognition can now understand the attributes behind images, and image recognition error rates are now lower than those of humans. Through advances in machine learning, Google has successfully applied convolutional neural networks to analyze and classify visual imagery. [3]

- The computational architecture of data centers has been redesigned and optimized for AI. Google unveiled the original Tensor Processing Unit (TPU) last year, but it only handled “inference” (running already-trained models on new data). The second-generation TPUs handle both “inference” and “training.” Internal tests have shown that TPUs cut computing times in half compared to commercially available GPUs. By developing a chip that delivers faster processing, Google can now process large data sets that previously went unused because of high computing costs, which should translate into better-trained, more accurate AI models. [4]

- Designing machine learning models is time-consuming and difficult, and Google wants to make machine learning easier for developers to use. Its approach, called AutoML, essentially uses neural nets to design better neural nets. It relies on reinforcement learning: a controller network proposes a “child” model architecture, the child model is trained and evaluated on the target task, and its performance is fed back to the controller. By repeatedly generating new architectures, testing them and feeding back the results, AutoML learns to select candidate neural nets that are well suited to the task. [5]

![AutoML [3]](http://mse238blog.stanford.edu/wp-content/uploads/2017/07/3.png)
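
The propose, evaluate and feed-back loop described in the AutoML bullet can be sketched in a few dozen lines. This is a toy illustration under stated assumptions, not Google's AutoML: the search space (`SEARCH_SPACE`), the scoring function `evaluate_child` and the `Controller` class are all hypothetical. In the published work the controller is a recurrent network and each reward comes from actually training a child network; here an independent softmax policy per design decision stands in for the controller, a made-up formula stands in for training, and the policy is updated with the REINFORCE policy-gradient rule.

```python
import math
import random

# Hypothetical search space: an architecture is one option per decision.
SEARCH_SPACE = {
    "num_layers": [1, 2, 3, 4],
    "units": [16, 32, 64, 128],
}


def evaluate_child(arch: dict) -> float:
    """Hypothetical stand-in for 'train the child model, return validation accuracy'.

    This toy score simply peaks at 3 layers of 64 units; a real search would
    train and evaluate an actual network here.
    """
    layer_gap = abs(arch["num_layers"] - 3)
    unit_gap = abs(SEARCH_SPACE["units"].index(arch["units"]) - 2)
    return 1.0 - 0.1 * layer_gap - 0.1 * unit_gap


class Controller:
    """Toy controller: an independent softmax policy per decision, trained with REINFORCE."""

    def __init__(self, space: dict, lr: float = 0.2, seed: int = 0):
        self.space = space
        self.logits = {key: [0.0] * len(options) for key, options in space.items()}
        self.lr = lr
        self.rng = random.Random(seed)
        self.baseline = None  # moving average of rewards, used to reduce variance

    def probs(self, key: str) -> list:
        exps = [math.exp(x) for x in self.logits[key]]
        total = sum(exps)
        return [e / total for e in exps]

    def sample(self) -> dict:
        """Propose a child architecture as one option index per decision."""
        return {
            key: self.rng.choices(range(len(options)), weights=self.probs(key))[0]
            for key, options in self.space.items()
        }

    def update(self, choice: dict, reward: float) -> None:
        """REINFORCE step: raise the log-probability of choices that beat the baseline."""
        if self.baseline is None:
            self.baseline = reward
        advantage = reward - self.baseline
        self.baseline = 0.9 * self.baseline + 0.1 * reward
        for key, picked in choice.items():
            p = self.probs(key)
            for i in range(len(p)):
                # Gradient of log softmax(logits)[picked] with respect to logits[i].
                grad = (1.0 if i == picked else 0.0) - p[i]
                self.logits[key][i] += self.lr * advantage * grad


def search(steps: int = 300, seed: int = 0):
    controller = Controller(SEARCH_SPACE, seed=seed)
    best_arch, best_reward = None, float("-inf")
    for _ in range(steps):
        choice = controller.sample()                                   # propose a child
        arch = {key: SEARCH_SPACE[key][idx] for key, idx in choice.items()}
        reward = evaluate_child(arch)                                  # "train and evaluate" it
        controller.update(choice, reward)                              # feed the result back
        if reward > best_reward:
            best_arch, best_reward = arch, reward
    return best_arch, best_reward, controller


best_arch, best_reward, controller = search()
print(best_arch, best_reward)
```

In a real search, each call to `evaluate_child` would mean training a full child network, so the number of proposals the controller makes largely determines the compute cost.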

Apple WWDC Takeaways [6]

Apple’s core mission is to deliver the best user experience through innovative hardware, software and services. WWDC focused on four areas:

- Platform updates were announced across iOS, macOS, tvOS and watchOS, all due this fall. The improvements were incremental and focused on simplifying the user experience. Highlighted features included person-to-person payments via Apple Pay, a redesigned App Store, cross-device syncing of on-device machine learning and new iPad multitasking capabilities.

- Product updates to the Mac and iPad lines emphasized better displays and improved specs. Apple also previewed the iMac Pro, a high-end iMac aimed at professional users such as video editors and developers.

- A high-end smart home speaker, HomePod, was introduced, showcasing voice-driven interaction powered by Siri, high-quality acoustics and quintessential Apple design.

- AR/VR capabilities featured throughout the hardware and software updates and mostly focused on giving developers better tools, such as the new ARKit framework, to take advantage of device capabilities. The highlight of the conference was a demonstration of an augmented reality game running on an iPad.

Final Impressions

Both companies outlined different approaches to positioning themselves for an AI-first world built around a more natural and immersive experience centered on voice and vision, and both played to their strengths. Google continued to build on its expertise in AI and ML, launching services around these data-focused competencies. Apple focused on delivering incremental, product-focused improvements across its entire lineup to strengthen its integrated ecosystem. At first blush, Google’s strategy appears to cast a wider net, with broader access to data that it can capture and process to drive smarter interactive services, while Apple remains more focused on the user experience and its ecosystem.

[2] https://static.googleusercontent.com/media/research.google.com/en//pubs/archive/45399.pdf

[3] https://ujjwalkarn.me/2016/08/11/intuitive-explanation-convnets/

[4] https://www.bloomberg.com/news/articles/2017-05-17/google-to-sell-new-ai-supercomputer-chip-via-cloud-business

[5] https://research.googleblog.com/2017/05/using-machine-learning-to-explore.html

[6] https://www.apple.com/apple-events/june-2017/


2 comments on “Two Tech Behemoths, Two Different Approaches”


When I think about speech recognition and the impact that Google and Apple have had on our lives, home automation comes to mind as part of the voice-driven immersive experience. Amazon is leading here with the Amazon Echo and Alexa, and Google also offers the Google Assistant. I read recently that Apple, too, is coming out with HomeKit, a platform controlled by Siri that can interact with and control home appliances (1). HomeKit is expected to support more than 100 devices this year alone. It is fascinating to see the strides being made in this area as voice recognition algorithms improve, and to imagine a future where every function of our daily lives could be voice driven.

1. https://www.cnet.com/news/everything-siri-can-control-in-your-house/

Hey Ushana, thank you for flagging these other products. I’m definitely aware of other competitors, particularly Amazon, which has been at the forefront of many of these technologies. I spent time going through both Google I/O and Apple WWDC 2017, and what stood out to me is that both companies are really starting to entrench behind their core competencies and expertise. What concerns me about some of Apple’s announcements are rumblings that it is falling further behind in Siri’s capabilities, even though it was first to market with a mass-market voice assistant. The name of the game is clearly data at this point, and Apple is definitely falling behind Google in this area. Anyway, thanks for the comment.