Where Eagles Dare

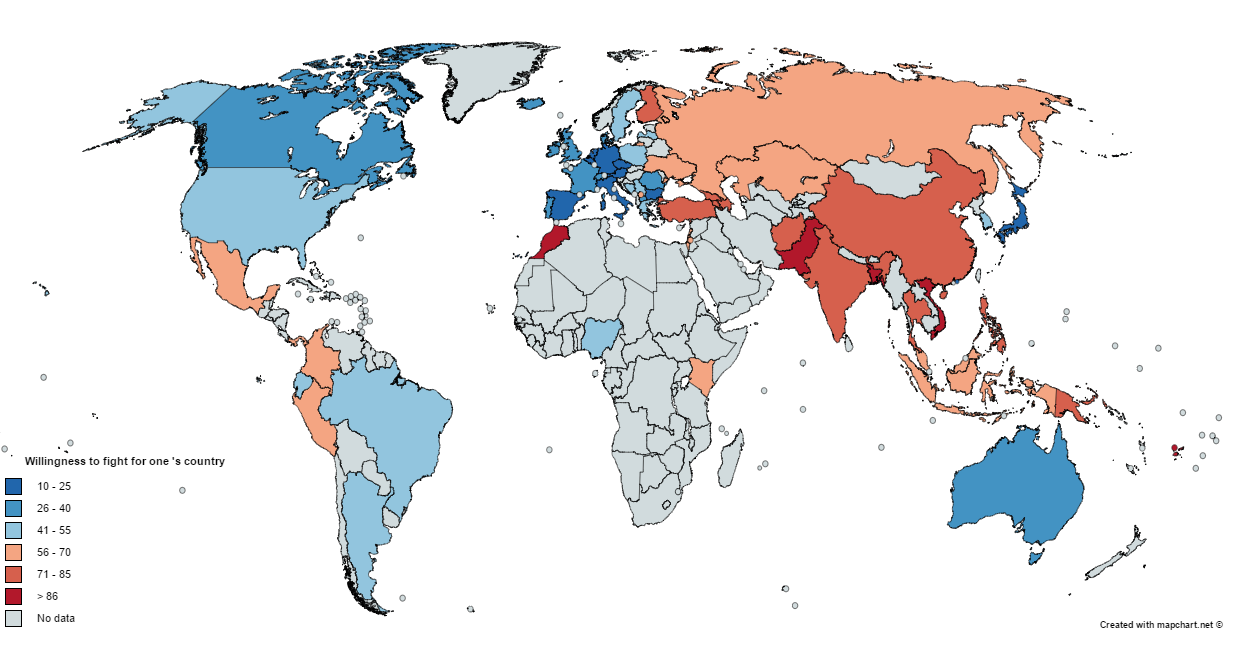

Automation is starting to affect more and more fields of expertise where humans were considered essential: driving cars, working in a factory, serving food and, lately, the art of killing one another. As the era of mass warfare came to an end, the populations of Western countries became increasingly unwilling to accept massive casualties; war became asymmetrical, and there are fewer incentives to fight a non-defensive war. 33 years ago Stanley Kubrick started filming Full Metal Jacket; the idea of waging a war like the Vietnam War seems improbable today.

With fewer men on the field and strong public opposition to military deaths (and a not so strong opposition to military spending), it isn’t a surprise that military commands around the world started to research and invest in new ways to wage war. The use of unmanned vehicles has now become commonplace in militaries around the world.

Military UAVs like the recently retired General Atomics MQ-1 Predator, one of the most common drones used by the US military, are remotely controlled by a drone operator safely positioned far from the front line. This connection to a human operator is one of the weaknesses of this class of armaments: disrupting the signal (by saturating the satellite uplink with junk data, or through a hacking attack) can render the drone virtually useless or impair its ability to react to an ever-changing combat situation (the satellite delay is already a problem for military drones). Furthermore, in the remote event of a full-scale war against an opponent of a comparable technological level, the satellite constellation itself can be put in danger by air-launched anti-satellite missiles or by the use of nuclear weapons in low orbit.

Another issue with current drone technology concerns long-duration surveillance missions: modern drones produce terabytes of surveillance data, which can become difficult not only to transmit and store but also to analyze properly, since most of it isn’t useful for the mission. An onboard AI could analyze this data without needing to broadcast it to a human operator, working out what’s happening on the battlefield and then contacting HQ for further instructions.

To solve and prevent these issues, the global military-industrial complex is moving towards semi- or fully autonomous war machines. AI-assisted drones could act as the ultimate force multiplier for the human soldier of tomorrow, providing virtually constant surveillance, destroying targets of opportunity, and in general assisting the pilot, sailor or infantryman on the battlefield of the future. Furthermore, military AIs could still carry out their mission even if their link to the military chain of command were severed (fulfilling a role similar to the Soviet “dead hand” doctrine), acting as a deterrent against attempts to decapitate the military leadership; it would be impossible for the enemy to find and destroy every single autonomous AI ready to carry out retaliatory strikes.

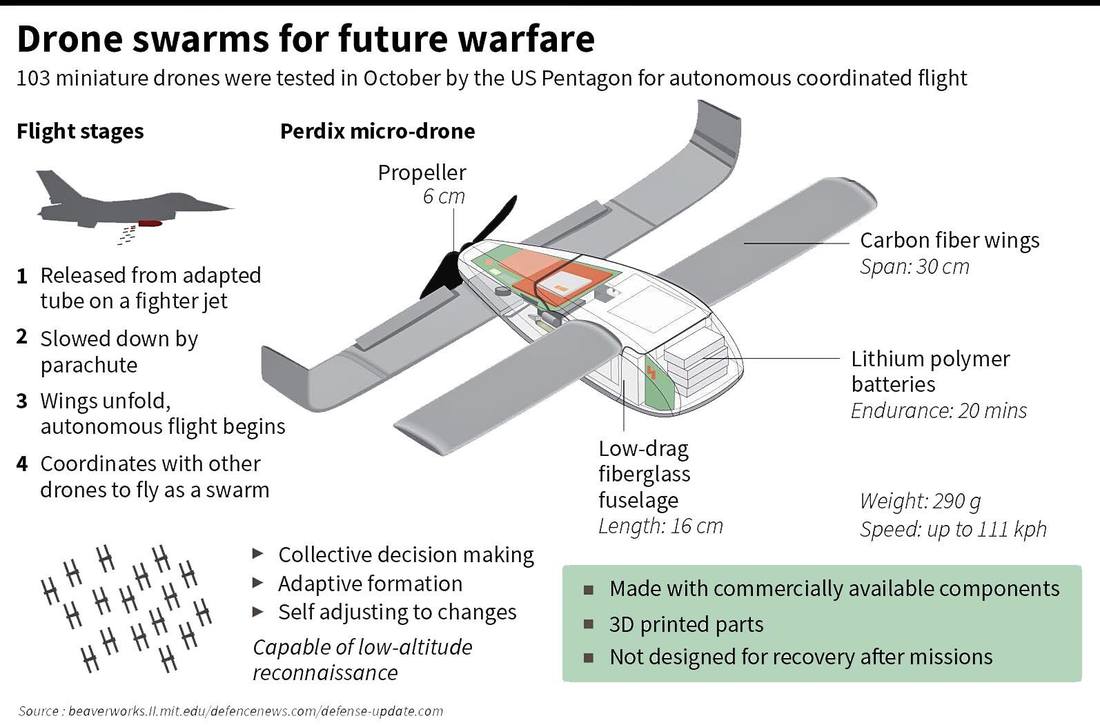

AI-reliant companies and startups already see defense investments as an opportunity, despite Google leaving Project Maven (a machine learning platform for military drones) [1]. A very interesting project being developed by the US military is the Perdix [2]. The Perdix is an unarmed drone that operates in a swarm of several dozen units. A single unit is a cheap and expendable UAV no bigger than a hand, but in a swarm, Perdix can scan an area, identify enemy combatants and priority targets (using face recognition technology) and ask for permission to guide a missile fired from a secondary platform (which could be a missile boat or a bomber several kilometres away) right on target. The Perdix swarm can also serve as defensive equipment, acting as a decoy to defend a friendly fighter from enemy guided missiles or scouting the terrain to protect friendly infantry from ambushes.

The advantage of using a swarm of cheap drones instead of a single, more expensive unit is obvious for the military since a swarm of small, independent AIs is much more difficult to identify and disable in a single hit.

In the short to medium term, AI weapons will require human assistance in fields like target discrimination, priority engagements, and attack authorization (mostly because of political concerns), but in the future, fully autonomous AIs could be used in situations where requiring a human presence can be detrimental to the success of the mission (like high-speed air engagements, submarine warfare or operations in low satellite coverage areas, which include areas outside of Earth’s gravity well).

Sources:

[1]: www.gizmodo.com/google-plans-not-to-renew-its-contract-for-project-mave-1826488620

[2]: www.cbsnews.com/news/60-minutes-autonomous-drones-set-to-revolutionize-military-technology-2/

7 comments on “Where Eagles Dare”

Algorithms that accurately and precisely interpret the real-time data gathered by the drones themselves are the prerequisite for safe flights and missions. To achieve that, engineers need to cooperate with experts from different fields to better tune the drones’ parameters.

As we saw during last Friday’s very interesting presentation, there are multiple possibilities for R&D relationships between the DoD and the private sector. A very interesting environment for VCs to be in.

This is very interesting and very scary at the same time. On one side, eliminating the need to have soldiers on the battlefield engaging in extremely dangerous situations is a win for everyone. On the other side, leaving the decision making to an individual relying on data processed by an AI algorithm is scary in so many ways. In business, we’re all used to making tough decisions with partial data; a senior manager does this every day. The main difference is that these are typically not life-or-death decisions which can change families and societies in the long term. The evolution of this will be interesting to watch as tests are performed and decision makers are pushed to use the new technology quickly because the huge investment needs to be justified.

I didn’t think about taking a business approach to this topic; it’s very interesting now that you made me reflect on it. Something like the sunk cost fallacy could be very dangerous if we start using weaponized AIs. It raises some interesting questions regarding accountability as well.

I still think, however, that at least for the short to medium term there will be some serious constraints on how free armed AI drones are to engage, mostly because of ethical and political concerns. I wouldn’t want to be the CEO of the first AI company whose drone just shot a bunch of civilians!

I don’t think that the concerns are just political. I think there are real concerns about the claims currently made for technologies such as facial recognition, which call into question the appropriateness of using these tools in situations that would require such reliance on AI. In warfare, the precision and reliability of these tools should be exceptional prior to full deployment. Unfortunately, there is little insight into how these decisions are being evaluated.

Unfortunately, most of the research and data on the effectiveness of these weapon systems is probably classified. Do you think there’s a risk of abuse of this kind of technology (for example, failures or mistakes kept secret for military reasons)?

This is just another example of how big of a role the military has played historically in the advancement of science.

It just makes one wonder whether such advancements would be possible in a peaceful and pacifist world.