Core Cloud to Edge Cloud: It will take Time

Cloud, or centralized, computing (CC) has had a profound impact on our lives, whatever your standpoint. For the end user, it has allowed a whole new breed of digital applications to be provided and tons of data to be accessed in a global, cheap, and quasi-immediate fashion. For businesses and enterprises, CC has enabled previously impossible business models such as “pay as you go/grow”, knocked down the barriers to market entry for software-fueled services, and made possible highly complex data analytics for a diversity of purposes, including HR management, product de-risking, or financial healing. Developers are now able to build plenty of pilot projects and pursue their full development without astronomical upfront investment in compute power, thanks to platforms like Amazon Web Services or Microsoft Azure. And telecom network providers themselves can now implement precise, fast, and dynamic resource allocation while supporting multi-vendor, multi-technology, and multi-tenant environments, thereby compressing costs and improving communication reliability. All of this happened in about a decade, from nothing to an explosive, highly scalable $50+ billion economy.

Now, what if this is not enough? What if I told you that this model is, essentially, not future-proof?

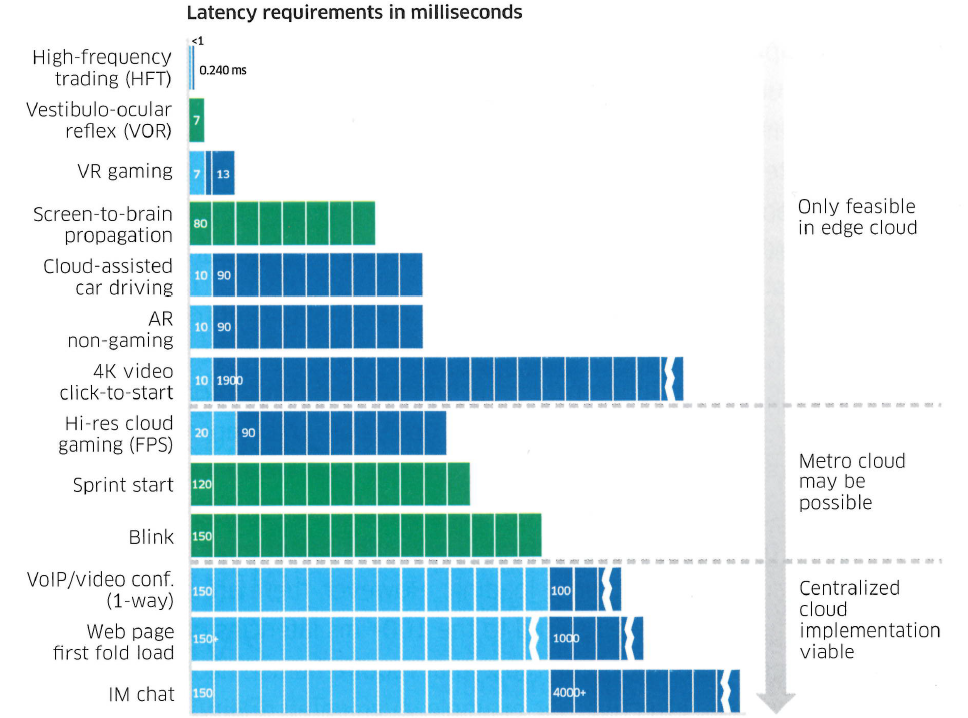

Dean Paron – Azure’s director – said it himself: Microsoft is already looking into the next generation of cloud, the Edge Cloud (EC), which, in short, aims to enhance the centralized approach with the ability to control time. Why? Because the way people and things communicate is evolving fast, and with it the service requirements, so that current data center architectures become progressively inadequate. The accompanying figure supports this point: a roster of present and future applications and their corresponding latency requirements (latency/delay = the time it takes to complete a communication).

A quick analysis reveals a major point: if we want 16K video streaming, if we want cooperative robotics, self-driven cars, high-frequency trading, industry 4.0, augmented reality, virtual/multidimensional gaming, on-demand anything, real-time automation, multi-port domotics… if we even want 5G to become a reality, the concept of cloud computing and networking must evolve from purely transactional (i.e. storage, video, search, messaging, unicast streaming) to tactile and immersive. And again, it all boils down to time, its control, and its compression. Now the real question is: what is the strategy of the telecom and cloud industries to achieve this?

From centralized to distributed (edge) cloud.

The first action to address the new immersive and mission-critical applications is, therefore, to place data and processing as close to the user as possible, or in other words, to migrate from centralized processing in massive-scale “core” data centers to a distributed topology featuring user location awareness and proactive resource allocation. This trend, which has been ongoing for the last few years (around 60% of all cloud infrastructures will be Edge by 2015), rests on four major reasons. First:

>> Speed of light : 299 792 458 m/s;

>> Average refractive index of standard singlemode optical fiber core: 1.5;

>> Target one-way transport latency: <0.5 ms (e.g. HFT, Cloud RAN, or distributed micro-services)

>> Maximum tolerable distance from data source to user/endpoint: (299 792 458 / 1.5) × 0.0005 ≈ 100 km

As simple as fundamental physics: the DC needs to be really close to the user (100 km or less vs. 1000s of km currently) to serve the new generation of latency-sensitive applications. And even for applications that are less sensitive but still require some form of real-time human-machine interaction, latency beyond 100 ms will make that interaction perceptibly laggy.
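The arithmetic above can be reproduced with a few lines of Python. This is only a sketch under the same simplifying assumptions as the text (pure propagation delay in fiber with a refractive index of 1.5, no switching or queuing time); the function names are illustrative and do not come from any library.

```python
SPEED_OF_LIGHT_M_S = 299_792_458   # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.5       # average value assumed in the text


def max_distance_km(one_way_latency_s, refractive_index=FIBER_REFRACTIVE_INDEX):
    """Longest fiber span whose propagation delay fits the latency budget."""
    speed_in_fiber_m_s = SPEED_OF_LIGHT_M_S / refractive_index
    return speed_in_fiber_m_s * one_way_latency_s / 1000.0


def propagation_delay_ms(distance_km, refractive_index=FIBER_REFRACTIVE_INDEX):
    """One-way propagation delay over a fiber span of the given length."""
    speed_in_fiber_m_s = SPEED_OF_LIGHT_M_S / refractive_index
    return distance_km * 1000.0 / speed_in_fiber_m_s * 1000.0


if __name__ == "__main__":
    # 0.5 ms one-way budget, as targeted in the text
    print(f"max distance for 0.5 ms: {max_distance_km(0.5e-3):.1f} km")
    # a core data center 1000 km away
    print(f"delay over 1000 km: {propagation_delay_ms(1000):.2f} ms")
```

With a 0.5 ms one-way budget the reach comes out just under 100 km, while a 1000 km span already costs about 5 ms one way before any processing takes place.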

Second motivator (cost): it is more expensive to transport a bit over 1000 km than over 100 km. Shorter transport distances therefore benefit both the network operator and the user, who will then be able to enjoy their respective services at a lower cost. In addition, because edge clouds will be small to medium in size, both capital and operational costs per data center will come in finer increments, which will allow for much milder, and progressive, investment approaches.

Third motivator (security and protection): data stays geographically local, which means that critical applications (e.g. military) or sensitive content (e.g. biological, pharmaceutical, financial) could be run or stored on DCs within trustworthy infrastructures and/or selected territories.

Fourth motivator (traffic control): edge-based networking minimizes the number of network hops, which hinder the ability to control and engineer the traffic. This is tightly linked to the second, and last, strategic measure towards the implementation of a fully compliant Edge Cloud, because when time-to-transport shrinks, data processing/switching/queuing and network reconfiguration become the dominant concerns.

From statistical packet multiplexing, to deterministic dynamic networking.

Current data centers rely mainly on Ethernet technology and a hierarchical topology, where each rack of servers is equipped with a first aggregation Ethernet switch, followed by additional higher-layer switches that provide full connectivity. Even for tier-1 DCs, these multi-stage architectures induce an aggregate end-to-end latency on the order of milliseconds, depending on the number of flows and the network load. Most importantly, the statistical approach to multiplexing the data before transmission makes latency an effectively random metric, and hence uncontrollable.
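To make the contrast concrete, here is a toy simulation, not a model of any real switch or of the proposals cited in the references. It assumes a single output port with Poisson arrivals and a fixed per-packet service time for the statistical case, and a fixed frame of reserved time slots (one slot per flow, at most one packet per flow per frame) for the deterministic case. All names and parameters are hypothetical.

```python
import random


def statistical_mux_latencies(n_packets, load, service_time_us, seed=0):
    """FIFO queue on one shared port: latency depends on what others send."""
    rng = random.Random(seed)
    mean_interarrival_us = service_time_us / load
    now = 0.0
    port_free_at = 0.0
    latencies = []
    for _ in range(n_packets):
        # Poisson arrivals: exponentially distributed gaps between packets
        now += rng.expovariate(1.0 / mean_interarrival_us)
        start = max(now, port_free_at)       # wait while the port is busy
        port_free_at = start + service_time_us
        latencies.append(port_free_at - now)
    return latencies


def slotted_latencies(n_packets, n_flows, slot_us, seed=0):
    """Reserved slot per flow: the wait never exceeds one frame period."""
    rng = random.Random(seed)
    frame_us = n_flows * slot_us
    latencies = []
    for _ in range(n_packets):
        # time left until this flow's next reserved slot begins
        wait_us = rng.uniform(0.0, frame_us)
        latencies.append(wait_us + slot_us)
    return latencies


if __name__ == "__main__":
    stat = statistical_mux_latencies(100_000, load=0.9, service_time_us=1.0)
    slot = slotted_latencies(100_000, n_flows=8, slot_us=1.0)
    print(f"statistical: min={min(stat):.2f} us, max={max(stat):.2f} us")
    print(f"slotted:     min={min(slot):.2f} us, max={max(slot):.2f} us")
```

In the statistical case the worst-case latency has no fixed ceiling and grows with the load, whereas in the slotted case it is capped by design at one frame period plus one slot. That hard bound is what "deterministic" refers to here.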

In order to provide satisfactory quality of service for all new applications, we must first be able to control time. It does not matter if it comes in the form of bitrate, time jitter, turn-up/-down time, or latency itself; guaranteeing the quality of service requires the network to feature the necessary hardware and software mechanisms to engineer time inside and in-between data centers. Here is a quantitative comparison that puts in perspective the kind of control and progress that is required in the upcoming years:

Centralized/Core vs. Edge Cloud:

- Bandwidth per application: 10s of Mbps vs. Gbps

- Latency sensitivity : >50ms vs. <1ms

- Time jitter sensitivity: 10s of μs vs. <100ns

- Turn-up/-down time: 1s-1h vs. 1ms-1s

Needless to say, there is great interest from industry, in turn triggering research and academia, in defining the stack of protocols, network architecture, and technologies that will enable such performance. And whereas a handful of proposals have already been presented around concrete elements and for specific applications (e.g. PROFINET or FlexE), the problem still eludes a complete, satisfactory solution.

I guess it is a matter of time…

References

– B. Evans, “Why Microsoft Is Ruling The Cloud, IBM Is Matching Amazon, And Google Is $15 Billion Behind,” in Forbes, 2018 [Online]. Available: https://www.forbes.com/sites/bobevans1/2018/02/05/why-microsoft-is-ruling-the-cloud-ibm-is-matching-amazon-and-google-is-15-billion-behind/#680813b61dc1

– M. K. Weldon, The Future X Network: A Bell Labs Perspective (2016), Taylor & Francis Group.

– N. Benzaoui et al., “CBOSS: Bringing Traffic Engineering Inside Data Center Networks,” in J. Opt. Commun. Netw., vol. 10, pp. 117-125, 2018

– J. M. Estarán et al., “Cloud-BOSS Intra-Data Center Network: On-Demand QoS Guarantees via μβ Optical Slot Switching,” in proc. of European Conference on Optical Communication (ECOC), 2017

– Nokia Internal

– FlexE Design Team, “Flexible Ethernet (FlexE) Deep Dive,” white paper/presentation, 2017 [Online]. Available: https://datatracker.ietf.org/meeting/98/materials/slides-98-ccamp-102-flexe-technology-deep-dive/

– PROFINET team, “PROFINET, Industrial Ethernet for advanced manufacturing,” in official website [Online]. Available : https://us.profinet.com/technology/profinet/

One comment on “Core Cloud to Edge Cloud: It will take Time”

A matter of time – as perfectly described in the post! So let’s think about how we could group the use cases that will benefit from edge compute resources, and what the technological change here means. Things that come to mind here are:

– Performance enhancements

– Cost Reductions

– Scalability

– Regulations

Higher bandwidth and lower latency improve the performance of immersive video applications. Lower latency enables critical machine type remote operations. On the cost reduction side, the edge will enable more efficient content delivery. Those locations that have the network built first will see the next wave of innovation first.